Day 4: Stemming and Lemmatization

Naveen

Naveen- 0

Introduction

Natural Language Processing (NLP) plays a critical role in understanding and processing human language. This blog discusses stemming and lemmatization, essential text normalization techniques in NLP.

What is NLP and Its Components?

NLP is an AI-based method of interacting with systems using natural language. It involves several steps: tokenization, lemmatization, POS tagging, named entity recognition, and chunking. Among these, stemming and lemmatization are crucial for text preprocessing.

Text Mining in NLP

Text mining, also known as text analytics, involves deriving meaningful insights from textual data. This process converts unstructured text into structured data for analysis through NLP techniques.

What are Stemming and Lemmatization?

Both techniques normalize text to prepare it for further processing:

- Stemming reduces words to their root form by removing suffixes, even if the result isn’t a valid word.

- Lemmatization brings words to their base or dictionary form, ensuring grammatical correctness.

These techniques are widely used in search engine optimization (SEO), web search indexing, tagging systems, and information retrieval.

Stemming: Overview and Techniques

Stemming maps words with similar meanings to a common root form. Common algorithms include:

- Porter Stemmer: A rule-based algorithm developed in 1979 that uses suffix stripping. It’s simple, fast, and ideal for information retrieval but may produce non-linguistic stems.

- Lancaster Stemmer: A more aggressive algorithm that generates shorter stems. However, it may lead to over-stemming, creating stems with no meaning.

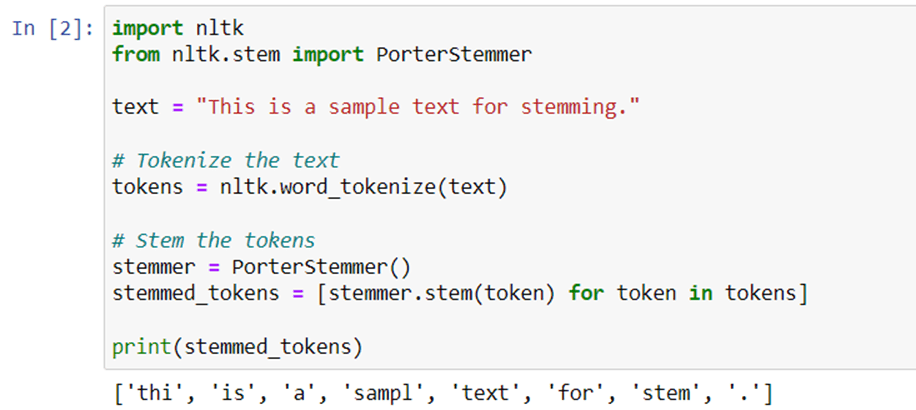

Examples in Python Using NLTK

The NLTK (Natural Language Toolkit) library provides tools to implement stemming:

- Porter Stemmer Example: Reduces “connected,” “connection,” and “connecting” to “connect.”

- Lancaster Stemmer Example: Generates even shorter stems, such as “destabilized” to “dest.”

Stemming is the process of reducing a word to its root or stem form. For example, the stem of the word “running” is “run”. Stemming algorithms are based on rules and heuristics that remove affixes and suffixes to generate the stem of the word. Stemming is a relatively simple and fast process that can be applied to large amounts of text data.

Let’s see an example of Stemming in Python:

However, stemming has some limitations. For example, it can generate stems that are not actual words, which can affect the accuracy of NLP tasks such as text classification and sentiment analysis. Therefore, lemmatization is often preferred over stemming for more accurate and precise results.

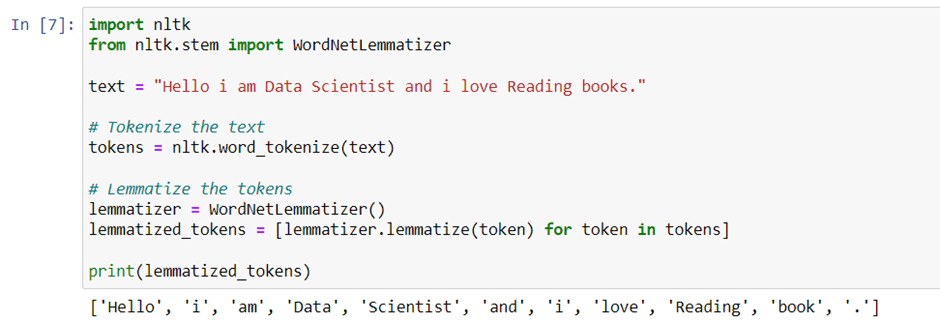

(adsbygoogle = window.adsbygoogle || []).push({});Lemmatization: An Advanced Approach

Unlike stemming, lemmatization considers the word’s context and ensures that the output is meaningful. For example, “running” and “ran” are lemmatized to “run.”

Applications of Stemming and Lemmatization

- Search Engines: Helps match queries with relevant documents.

- Tagging Systems: Assigns common tags to content with different word forms.

Stemming vs. Lemmatization: Key Differences

- Output: Stemming often produces non-words, while lemmatization ensures valid words.

- Speed: Stemming is faster but less accurate.

- Use Case: Lemmatization is better for applications requiring grammatical accuracy.

Lemmatization produces more meaningful and accurate results than stemming, but it can be slower and more computationally expensive, especially when dealing with large amounts of text data.

(adsbygoogle = window.adsbygoogle || []).push({});Conclusion

Stemming and lemmatization are important techniques for pre-processing text data in NLP tasks. Stemming is a simple and fast process that can be applied to large amounts of text data, but it may not produce actual words. Lemmatization, on the other hand, is a more accurate and precise process that takes into consideration the context of the word and its grammar, but it may be slower and more computationally expensive. The choice of technique depends on the specific needs of the NLP task at hand.

Day 3: Tokenization and stopword removal

Day 5: Part-of-Speech Tagging and Named Entity Recognition

Popular Posts

Author

-

Naveen Pandey has more than 2 years of experience in data science and machine learning. He is an experienced Machine Learning Engineer with a strong background in data analysis, natural language processing, and machine learning. Holding a Bachelor of Science in Information Technology from Sikkim Manipal University, he excels in leveraging cutting-edge technologies such as Large Language Models (LLMs), TensorFlow, PyTorch, and Hugging Face to develop innovative solutions.

View all posts