Computer Vision for Beginners – Part 4

Naveen


In this article, I will discuss various algorithms for image feature detection and description using OpenCV.

Introduction

What do humans typically do when they see an image?

They can, for example, recognize the faces in it. In simple terms, computer vision is what allows computers to see and process visual data much as humans do. It involves analyzing images to produce useful information.

What is a feature?

To identify an apple from a picture, you’ll need something that tells you exactly what it is.

From its shape and texture, we can tell that the apple is ripe. By analyzing its color, we can say that this particular apple is red.

The clues used to identify or recognize the contents of an image are called the features of that image. In the same way, a computer detects various features in an image to understand what it shows.

We will discuss some of the algorithms of the OpenCV library that are used to detect features.

Feature Detection Algorithms

- Harris Corner Detection

The Harris corner detection algorithm is used to detect corners in an input image. It is widely used in computer vision and robotics applications.

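- Look at a small window around each pixel and measure how much the intensity inside it changes when the window is shifted slightly in different directions. In a flat region the change is small in every direction, along an edge it is large in only one direction, and at a corner it is large in all directions.

- For each window, compute a score R = det(M) − k·(trace M)², where M is the 2×2 matrix of summed products of the image gradients (Ix², Ix·Iy, Iy²) in the window and k is an empirical constant, typically 0.04 to 0.06.

- Threshold the score and mark up the corners: R is large and positive at corners, negative along edges and close to zero in flat regions, so windows whose R exceeds the threshold are marked as corners.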
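The steps above can be sketched from scratch in NumPy. This is a minimal, illustrative version, not OpenCV's implementation: it assumes `np.gradient` for the image derivatives, an unweighted 3×3 window instead of a Gaussian one, and a threshold set at a fraction of the strongest response. The function names and defaults are my own choices. In OpenCV, the equivalent response map comes from `cv2.cornerHarris(gray, blockSize, ksize, k)`.

```python
import numpy as np

def box_sum(a, size=3):
    """Sum of `a` over a size x size window centred on each pixel (zero padding)."""
    pad = size // 2
    p = np.pad(a, pad)
    out = np.zeros(a.shape, dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += p[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out

def harris_response(img, k=0.04, window=3):
    """Harris score R = det(M) - k * trace(M)**2 at every pixel."""
    # Assumption: simple central differences stand in for Sobel derivatives
    iy, ix = np.gradient(img.astype(float))   # derivatives along rows (y) and columns (x)
    # Entries of the 2x2 matrix M, summed over the window around each pixel
    sxx = box_sum(ix * ix, window)
    syy = box_sum(iy * iy, window)
    sxy = box_sum(ix * iy, window)
    det = sxx * syy - sxy ** 2
    trace = sxx + syy
    return det - k * trace ** 2

def harris_corners(img, k=0.04, window=3, rel_threshold=0.1):
    """Threshold R at a fraction of its maximum; return (row, col) corner pixels and R."""
    r = harris_response(img, k, window)
    rows, cols = np.nonzero(r > rel_threshold * r.max())
    return list(zip(rows.tolist(), cols.tolist())), r

# Example: a bright 10x10 square on a dark 20x20 background
img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0
corners, response = harris_corners(img)
```

On this synthetic square, R is positive only in small clusters around the four corners, negative along the edges and exactly zero in the flat interior: the three cases (corner, edge, flat) that the score is meant to separate.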

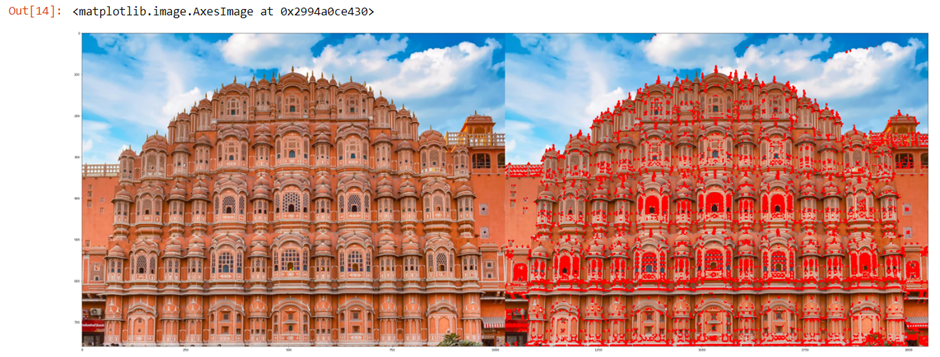

- Shi-Tomasi Corner Detector

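This algorithm is similar to Harris corner detection, but it computes the score R differently: R = min(λ1, λ2), where λ1 and λ2 are the eigenvalues of the 2×2 matrix of image gradients that Harris also uses. A window therefore counts as a corner only when both eigenvalues are large. OpenCV's function for this detector, goodFeaturesToTrack, is designed to return the best N corners in an image.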
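Below is a NumPy sketch of the Shi-Tomasi score, min(λ1, λ2), computed in closed form for each pixel's 2×2 gradient matrix, followed by a greedy selection of the N strongest corners that keeps the chosen corners at least `min_distance` pixels apart. The selection loosely imitates `cv2.goodFeaturesToTrack(gray, maxCorners, qualityLevel, minDistance)`; the helper names, the 3×3 window and the use of Chebyshev distance (OpenCV measures Euclidean distance) are assumptions of this sketch.

```python
import numpy as np

def box_sum(a, size=3):
    """Sum of `a` over a size x size window centred on each pixel (zero padding)."""
    pad = size // 2
    p = np.pad(a, pad)
    out = np.zeros(a.shape, dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += p[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out

def shi_tomasi_response(img, window=3):
    """Score R = min(lambda1, lambda2), the smaller eigenvalue of M at each pixel."""
    iy, ix = np.gradient(img.astype(float))
    sxx = box_sum(ix * ix, window)
    syy = box_sum(iy * iy, window)
    sxy = box_sum(ix * iy, window)
    # Closed-form smaller eigenvalue of the symmetric matrix [[sxx, sxy], [sxy, syy]]
    return 0.5 * ((sxx + syy) - np.sqrt((sxx - syy) ** 2 + 4 * sxy ** 2))

def best_n_corners(img, n, min_distance=3, quality=0.01):
    """Keep the n strongest pixels, skipping any closer than min_distance to a kept one."""
    r = shi_tomasi_response(img)
    threshold = quality * r.max()              # plays the role of qualityLevel
    order = np.argsort(r, axis=None)[::-1]     # flat indices, strongest first
    kept = []
    for flat in order:
        y, x = np.unravel_index(flat, r.shape)
        if r[y, x] <= threshold:
            break                              # every remaining score is weaker
        # Assumption: Chebyshev distance for simplicity
        if all(max(abs(y - ky), abs(x - kx)) >= min_distance for ky, kx in kept):
            kept.append((int(y), int(x)))
            if len(kept) == n:
                break
    return kept

# Example: the four corners of a bright square
img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0
best = best_n_corners(img, n=4)
```

Asking for `n=4` on the square returns exactly its four corner pixels, because those are the only places where both eigenvalues are large.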

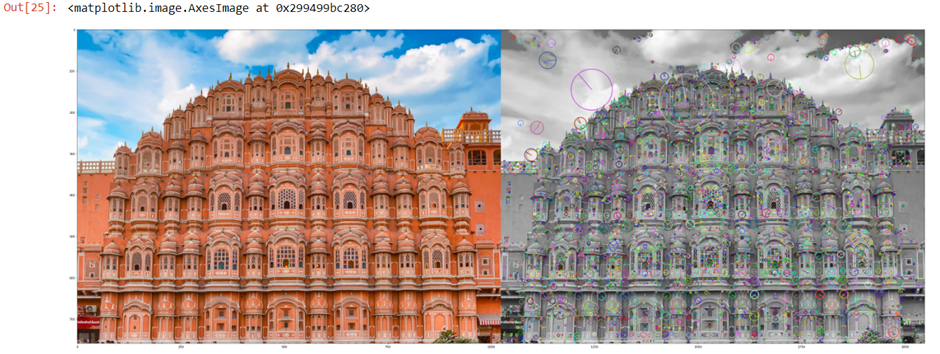

- Scale-Invariant Feature Transform (SIFT)

SIFT is typically used to detect keypoints such as corners and blob-like regions, and to describe them in a way that does not change when the image is scaled or rotated.

The Harris and Shi-Tomasi corner detectors are rotation-invariant but not scale-invariant: when an image is enlarged, a corner can spread out until a small window sees only a gently curved edge, and both detectors fail in this case. This is where SIFT plays a vital role. It searches for features across a range of scales, so it can detect features that the other methods miss, regardless of their size or orientation.
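Full SIFT is too long to show here, so the sketch below covers only its first stage, scale-space extremum detection. It blurs the image with Gaussians of increasing sigma, subtracts neighboring levels to get a difference-of-Gaussians (DoG) stack, and keeps pixels that are larger or smaller than all 26 neighbors in space and scale. It skips octaves, sub-pixel refinement, edge rejection, orientation assignment and the 128-value descriptor; the number of levels, the sigma step and the contrast threshold are assumptions. For real use, recent OpenCV versions (4.4 and later) provide `cv2.SIFT_create()` and its `detectAndCompute` method.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with a kernel truncated at 3 sigma (reflect padding)."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    out = np.pad(img, radius, mode="reflect")
    # Convolve rows, then columns; mode="valid" trims the padding back off
    out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 1, out)
    out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 0, out)
    return out

def dog_keypoints(img, sigma0=1.6, levels=5, contrast=0.03):
    """Return (row, col, sigma, response) for DoG extrema, strongest first."""
    img = img.astype(float)
    k = 2 ** 0.5                                  # assumption: sigma grows by sqrt(2) per level
    sigmas = [sigma0 * k ** i for i in range(levels)]
    blurred = np.stack([gaussian_blur(img, s) for s in sigmas])
    dog = blurred[1:] - blurred[:-1]              # difference of adjacent blur levels
    keypoints = []
    for i in range(1, dog.shape[0] - 1):          # needs a scale neighbour above and below
        for y in range(1, img.shape[0] - 1):
            for x in range(1, img.shape[1] - 1):
                v = dog[i, y, x]
                if abs(v) < contrast:             # discard low-contrast responses
                    continue
                cube = dog[i - 1:i + 2, y - 1:y + 2, x - 1:x + 2]
                if v == cube.max() or v == cube.min():
                    keypoints.append((y, x, sigmas[i], float(v)))
    keypoints.sort(key=lambda kp: abs(kp[3]), reverse=True)
    return keypoints

# Example: one bright Gaussian blob (std 3 pixels) centred at (20, 20)
yy, xx = np.mgrid[0:41, 0:41]
blob = np.exp(-((yy - 20) ** 2 + (xx - 20) ** 2) / (2 * 3.0 ** 2))
kps = dog_keypoints(blob)
```

The strongest extremum lands on the blob's center, at a sigma comparable to the blob's size. A larger blob would be picked up at a larger sigma, which is the idea behind SIFT's scale invariance.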

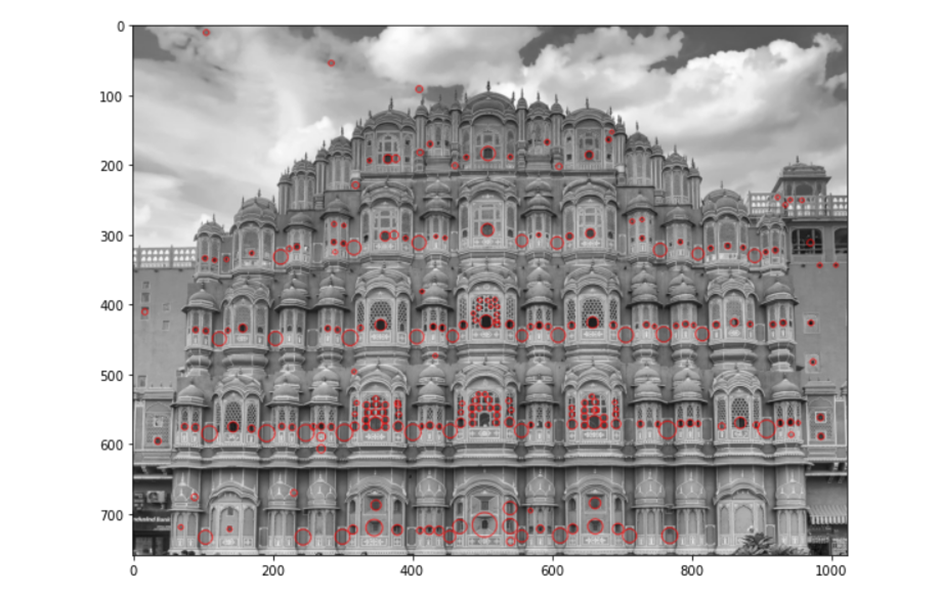

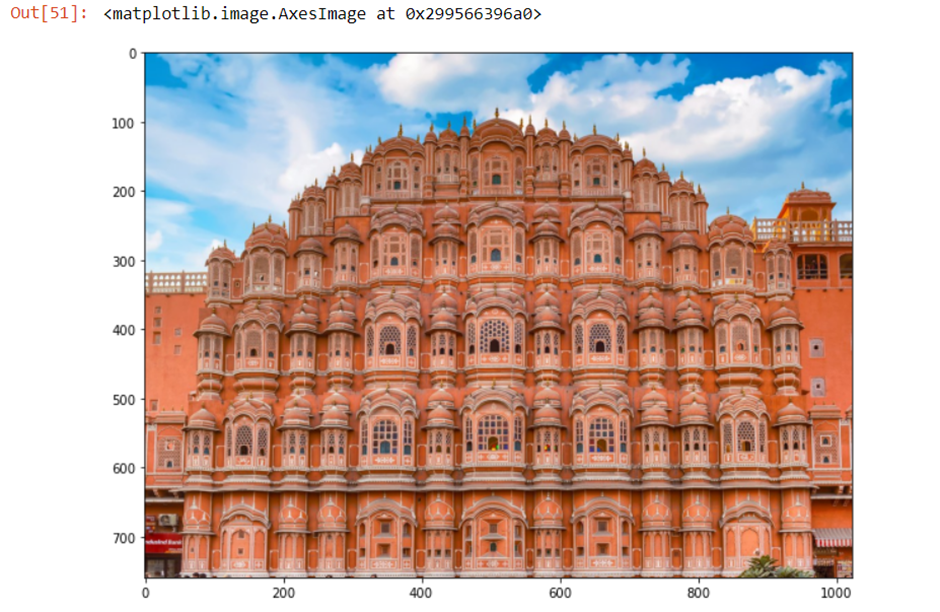

In this image, each detected keypoint is drawn as a circle with a line from its center. The size of the circle indicates the scale of the feature, and the line shows its orientation.

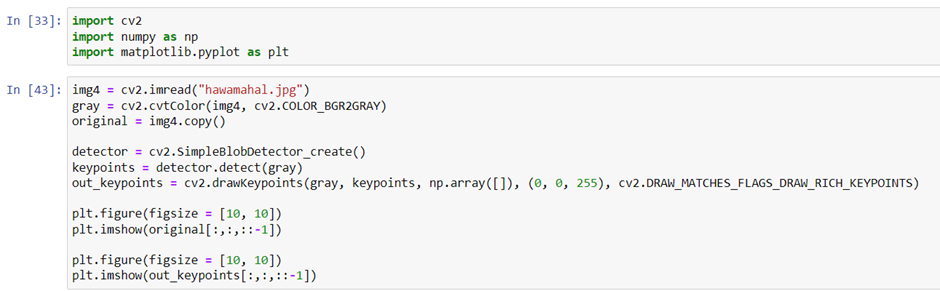

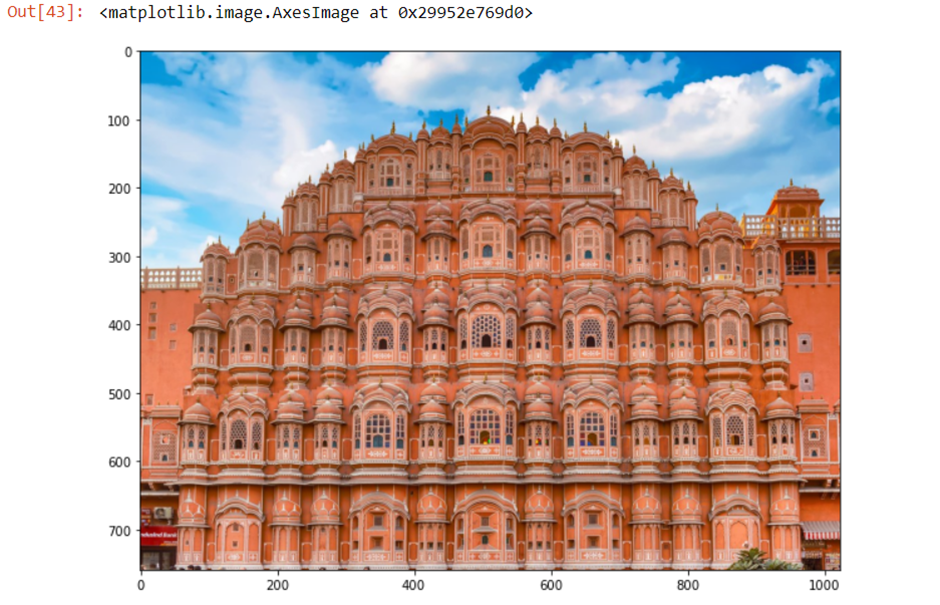

Detection of blobs

BLOB stands for Binary Large Object. It refers to a group of connected pixels or regions in a binary image that share a common property. In OpenCV, these regions are contours with some extra properties, such as the centroid, color, area, and the mean and standard deviation of the pixel values in the covered region.
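As a minimal sketch of the idea, the code below thresholds a grayscale image into a binary mask, groups touching foreground pixels into 4-connected regions with a breadth-first search, and reports each region's area, centroid, and the mean and standard deviation of its original pixel values. It uses connected-component labelling rather than OpenCV's contour functions, and the threshold value is an assumption; in OpenCV, `cv2.connectedComponentsWithStats` and `cv2.SimpleBlobDetector_create` cover similar ground.

```python
from collections import deque
import numpy as np

def find_blobs(gray, threshold):
    """Label 4-connected regions brighter than `threshold` and return per-blob stats."""
    mask = gray > threshold                       # binary image
    labels = np.zeros(gray.shape, dtype=int)      # 0 = background / not yet visited
    blobs = []
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue                              # already part of an earlier blob
        label = len(blobs) + 1
        labels[start] = label
        queue, pixels = deque([start]), []
        while queue:                              # breadth-first flood fill
            y, x = queue.popleft()
            pixels.append((y, x))
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if (0 <= ny < gray.shape[0] and 0 <= nx < gray.shape[1]
                        and mask[ny, nx] and not labels[ny, nx]):
                    labels[ny, nx] = label
                    queue.append((ny, nx))
        ys, xs = np.array(pixels).T
        values = gray[ys, xs].astype(float)       # original intensities inside the blob
        blobs.append({
            "area": len(pixels),
            "centroid": (float(ys.mean()), float(xs.mean())),
            "mean": float(values.mean()),
            "std": float(values.std()),
        })
    return blobs, labels

# Example: two separate bright regions on a dark background
img = np.zeros((10, 10))
img[1:3, 1:4] = 200          # 2x3 uniform blob
img[6:9, 6:9] = 100          # 3x3 blob ...
img[7, 7] = 160              # ... with a brighter centre pixel
blobs, labels = find_blobs(img, threshold=50)
```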

Feature Descriptor Algorithms

A feature of an image is typically a “keypoint”: a distinctive point that stands out from its surroundings. This point can be described with a descriptor, much as adjectives describe a noun. A descriptor algorithm takes the neighborhood around an input keypoint, computes a signature from that neighborhood, and returns it as a vector of numbers, so that keypoints from different images can be compared.
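To make the neighborhood-to-signature idea concrete, here is a toy descriptor that is not any named algorithm: it takes the square patch around a keypoint, subtracts the patch mean and divides by its norm (so uniform brightness and contrast changes cancel out), and matches keypoints by the smallest Euclidean distance between descriptors. The patch radius, function names and test images are hypothetical.

```python
import numpy as np

def patch_descriptor(gray, keypoint, radius=3):
    """Toy descriptor: the normalised pixel neighbourhood around a (row, col) keypoint."""
    y, x = keypoint
    patch = gray[y - radius:y + radius + 1, x - radius:x + radius + 1].astype(float)
    vec = patch.ravel() - patch.mean()            # cancels a brightness offset
    norm = np.linalg.norm(vec)
    return vec / norm if norm > 0 else vec        # cancels a contrast scale factor

def match(desc, candidates):
    """Index of the candidate descriptor closest to `desc` (Euclidean distance)."""
    dists = [np.linalg.norm(desc - c) for c in candidates]
    return int(np.argmin(dists))

# Example: image 2 is image 1 shifted by (2, 3) pixels, with more contrast and brightness
rng = np.random.default_rng(0)
img1 = rng.integers(0, 256, size=(20, 20)).astype(float)
img2 = np.zeros_like(img1)
img2[2:, 3:] = img1[:-2, :-3] * 1.5 + 20

d = patch_descriptor(img1, (10, 10))
candidates = [patch_descriptor(img2, p) for p in [(5, 5), (12, 13), (15, 8)]]
best = match(d, candidates)       # (12, 13) is where (10, 10) moved to
```

Because the second image is only a shifted and brightened copy, the correct candidate matches with a distance of essentially zero. Real descriptors summarize the neighborhood more robustly than raw pixels do, so they also cope with changes this toy version cannot handle.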

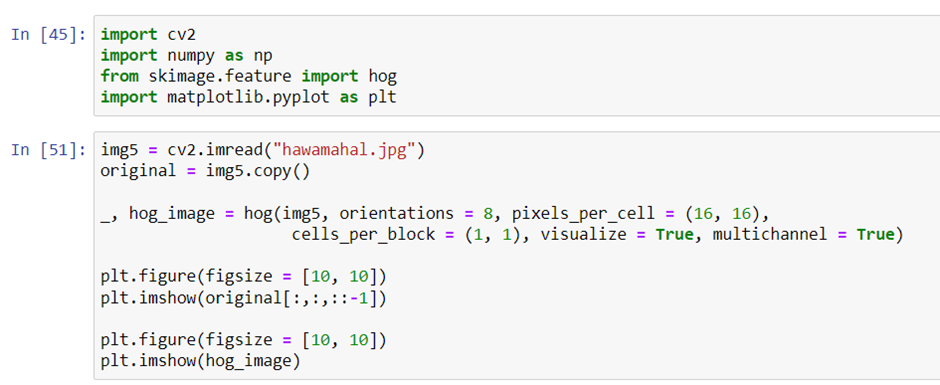

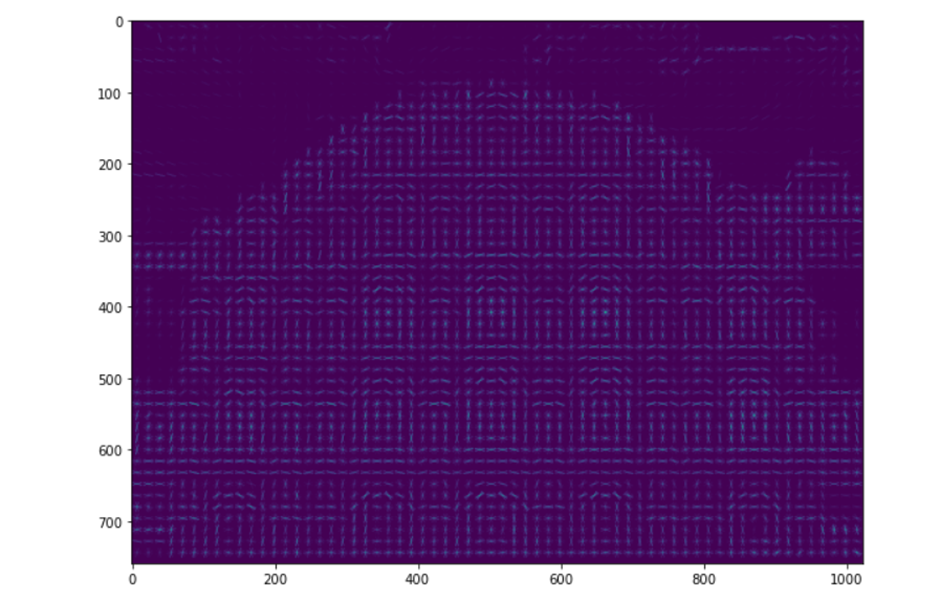

- Histogram of Oriented Gradients (HoG)

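Feature descriptors are ways to identify objects in an image. HoG is typically used to detect the presence of an object, such as a pedestrian, in an image. It divides the image (or a detection window) into small square cells, for example 8×8 pixels, and computes the gradient magnitude and direction at every pixel. Each cell then gets a histogram of gradient directions, usually 9 bins covering 0 to 180°, in which every pixel votes with a weight equal to its gradient magnitude. Finally, the histograms are normalized over overlapping blocks of neighboring cells and concatenated into one feature vector that captures the object's shape through its edge directions.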
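The simplified NumPy sketch below follows those steps: gradients via `np.gradient`, unsigned orientations binned into 9 bins over 0 to 180°, magnitude-weighted votes per 8×8 cell, and L2 normalization over 2×2-cell blocks. Real HoG implementations, including OpenCV's `cv2.HOGDescriptor` (whose default detection window is 64×128 pixels), also interpolate votes between neighboring bins and cells; the hard binning used here and the parameter names are simplifying assumptions.

```python
import numpy as np

def hog_descriptor(gray, cell=8, bins=9, block=2, eps=1e-6):
    """Simplified HoG: per-cell orientation histograms, L2-normalised over block x block cells."""
    gy, gx = np.gradient(gray.astype(float))
    magnitude = np.hypot(gx, gy)
    # Unsigned orientation in [0, 180) degrees
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180
    # Assumption: hard assignment to one bin (no interpolation between bins)
    bin_idx = np.minimum((angle / (180 / bins)).astype(int), bins - 1)

    n_cy, n_cx = gray.shape[0] // cell, gray.shape[1] // cell
    hist = np.zeros((n_cy, n_cx, bins))
    for cy in range(n_cy):
        for cx in range(n_cx):
            sl = (slice(cy * cell, (cy + 1) * cell), slice(cx * cell, (cx + 1) * cell))
            # Each pixel votes for its orientation bin, weighted by gradient magnitude
            hist[cy, cx] = np.bincount(bin_idx[sl].ravel(),
                                       weights=magnitude[sl].ravel(), minlength=bins)

    features = []
    for by in range(n_cy - block + 1):            # overlapping blocks, stride of one cell
        for bx in range(n_cx - block + 1):
            v = hist[by:by + block, bx:bx + block].ravel()
            features.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return hist, np.concatenate(features)

# Example: a vertical edge (left half black, right half white)
img = np.zeros((16, 16))
img[:, 8:] = 255
hist, features = hog_descriptor(img)
```

For this image every vote lands in bin 0, because the only gradients point horizontally across the vertical edge; the single 2×2-cell block yields a 36-value, unit-length feature vector.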

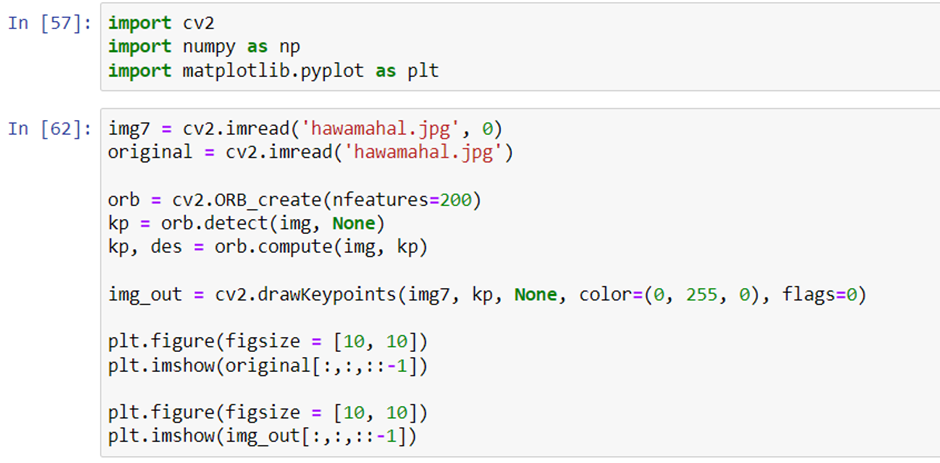

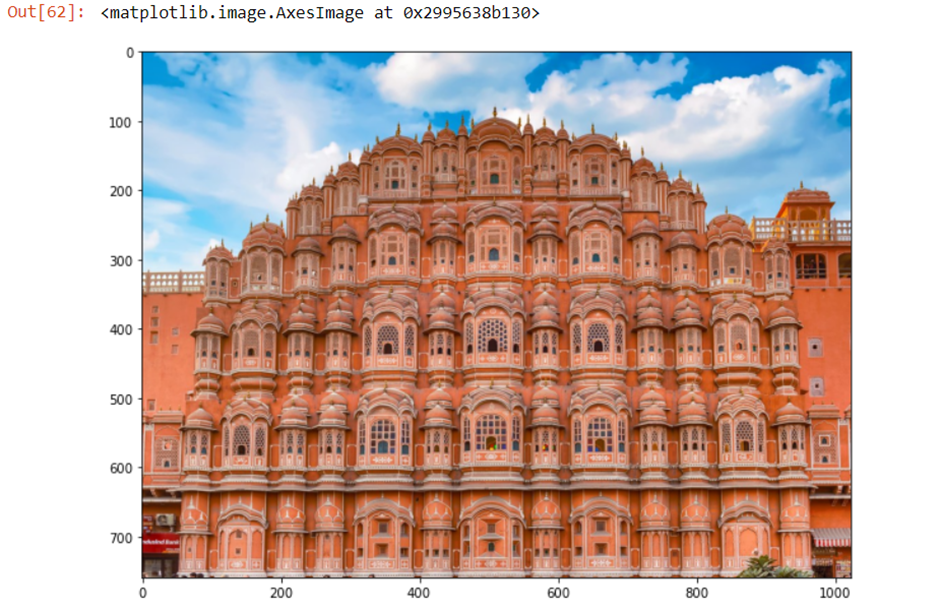

- Oriented FAST and Rotated BRIEF (ORB)

ORB combines two earlier techniques: the FAST keypoint detector and the BRIEF descriptor. It assigns each keypoint an orientation and rotates the BRIEF sampling pattern accordingly, which makes the descriptor robust to rotation. Each descriptor is a short binary string, so two keypoints can be compared just by counting the bits in which they differ (the Hamming distance). Because this requires no heavy computation on a GPU, ORB is helpful on less powerful devices such as mobile phones, and it is widely used as an efficient alternative to SIFT for matching the same points across different photos.
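The sketch below illustrates the "rotated BRIEF" part of ORB in NumPy: it estimates each keypoint's orientation with the intensity-centroid method, rotates a fixed set of point pairs by that angle, and records one bit per pair (is the first point darker than the second?). Descriptors are compared by Hamming distance. It assumes the keypoints are already given (the FAST detection step is omitted), and its random sampling pattern is a hypothetical stand-in for the learned pattern real ORB uses. In OpenCV you would instead call `cv2.ORB_create()` and match descriptors with `cv2.BFMatcher(cv2.NORM_HAMMING)`.

```python
import numpy as np

PATCH_RADIUS = 12   # assumption: radius of the disk used to estimate orientation
N_BITS = 256        # number of binary tests per descriptor

# Hypothetical sampling pattern: random (x, y) point pairs inside a disk of radius 9.
_rng = np.random.default_rng(0)
_pts = _rng.uniform(-9, 9, size=(4 * N_BITS, 2))
_pts = _pts[np.hypot(_pts[:, 0], _pts[:, 1]) <= 9][: 2 * N_BITS]
PAIRS = _pts.reshape(N_BITS, 2, 2)      # PAIRS[i] = [[x1, y1], [x2, y2]]

def orientation(gray, y, x, r=PATCH_RADIUS):
    """Intensity-centroid angle atan2(m01, m10) over a disk around (y, x)."""
    dy, dx = np.mgrid[-r:r + 1, -r:r + 1]
    disk = dx ** 2 + dy ** 2 <= r ** 2
    patch = gray[y - r:y + r + 1, x - r:x + r + 1].astype(float)
    m10 = np.sum(dx[disk] * patch[disk])
    m01 = np.sum(dy[disk] * patch[disk])
    return np.arctan2(m01, m10)

def steered_brief(gray, y, x):
    """Binary descriptor: compare intensities at point pairs rotated by the keypoint angle."""
    theta = orientation(gray, y, x)
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])
    # Rotate every (x, y) sample offset, then round to the nearest pixel
    offsets = np.round(PAIRS @ rot.T).astype(int)      # shape (N_BITS, 2, 2)
    vals = gray[y + offsets[..., 1], x + offsets[..., 0]]
    return vals[:, 0] < vals[:, 1]                     # one boolean per binary test

def hamming(a, b):
    """Number of differing bits between two binary descriptors."""
    return int(np.count_nonzero(a != b))

# Example: describe the same point before and after rotating the image by 90 degrees
rng = np.random.default_rng(1)
img = rng.integers(0, 256, size=(40, 40))
d1 = steered_brief(img, 20, 17)
# np.rot90 moves pixel (r, c) of a 40x40 image to (39 - c, r)
d2 = steered_brief(np.rot90(img), 22, 20)
dist = hamming(d1, d2)
```

The orientation step is what makes this comparison work: the estimated angle turns together with the image, so the rotated pattern samples the same pixels and the two descriptors agree bit for bit. An unsteered pattern would sample different pixels after the rotation.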

Computer Vision for Beginners – Part 1

Computer Vision for Beginners – Part 2

Computer Vision for Beginners – Part 3

Computer Vision for Beginners – Part 5


Author


Naveen Pandey has more than 2 years of experience in data science and machine learning. He is an experienced Machine Learning Engineer with a strong background in data analysis, natural language processing, and machine learning. Holding a Bachelor of Science in Information Technology from Sikkim Manipal University, he excels in leveraging cutting-edge technologies such as Large Language Models (LLMs), TensorFlow, PyTorch, and Hugging Face to develop innovative solutions.
