Mastering Keras: Best Practices for Optimizing Your Python Models

Naveen


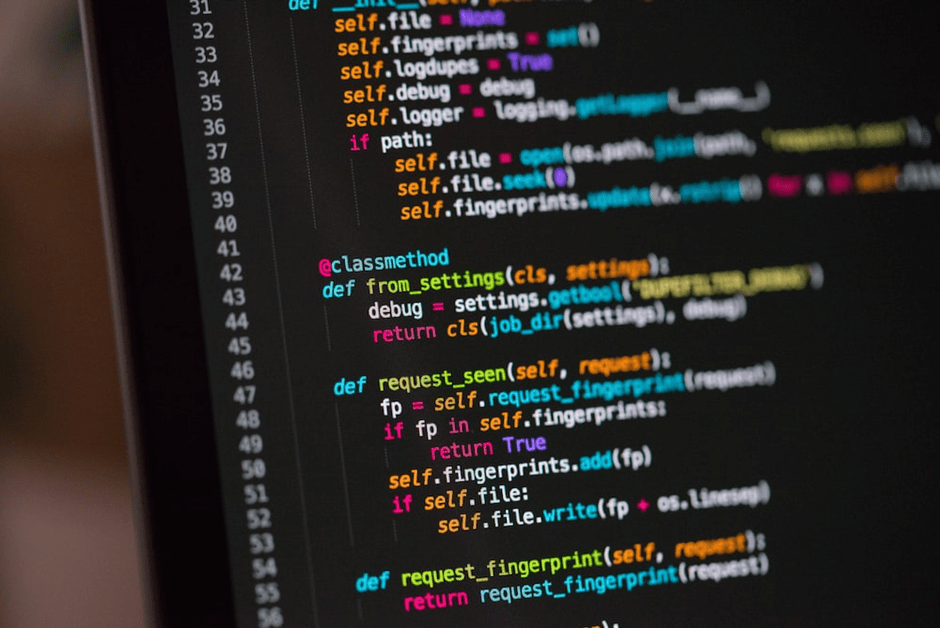

As deep learning has evolved, Keras, a Python library for building and training deep learning models, has grown steadily in popularity. It is known for its user-friendly API, which lets developers quickly build, test, and deploy models. This article covers best practices for optimizing Keras models: understanding the core Keras modules, preprocessing data, selecting appropriate activation functions, applying regularization techniques, improving model performance, avoiding common mistakes, and using advanced architectures for deep learning.

Introduction to Keras and its Importance in Deep Learning

Keras is an open-source Python library that provides a high-level API for building and training deep learning models. It was designed to enable fast experimentation and prototyping. Keras is most closely associated with TensorFlow, the deep learning library developed by Google, where it ships as tf.keras; since Keras 3, it can also run on JAX and PyTorch backends. Because the backend handles the computation, Keras models can run on both CPUs and GPUs, which makes Keras suitable for both small and large-scale deep learning projects.

Keras is popular because of its user-friendly interface, which lets developers build and train neural networks in only a few lines of code. This makes it easy to experiment with different architectures and hyperparameters, which is important in deep learning projects.

Keras has a large community of developers who contribute to the development of the library. This means that there are many resources available for developers who are new to Keras, including documentation, tutorials, and code examples.

Best Practices for Optimizing Keras Models

Understanding the Core Keras Modules and How They Work

Before you start building your Keras model, it’s important to understand the main Keras modules and what each one is for. The most commonly used modules are:

- keras.models: This module provides the model containers, such as Sequential (a linear stack of layers) and the functional Model class, which assemble layers into a network. It also includes utilities such as load_model for restoring saved models.

- keras.layers: This module contains the building blocks of a model, including dense, convolutional, pooling, recurrent, normalization, dropout, and activation layers.

- keras.optimizers: This module includes the optimization algorithms used to train your model, such as stochastic gradient descent (SGD), Adam, and RMSprop.

- keras.losses: This module includes the loss functions that training minimizes, such as mean squared error (MSE), categorical cross-entropy, and binary cross-entropy.
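To see how these modules fit together, here is a minimal sketch of a binary classifier. It assumes Keras is installed (either standalone Keras 3 with a backend, or the keras package that accompanies TensorFlow 2); the input size of 20 features and the layer widths are illustrative choices, not recommendations.

```python
import keras

# keras.models: Sequential stacks layers in order.
model = keras.models.Sequential([
    keras.Input(shape=(20,)),                     # 20 input features (illustrative)
    keras.layers.Dense(64, activation="relu"),    # keras.layers: a hidden layer
    keras.layers.Dense(1, activation="sigmoid"),  # output layer for binary classification
])

model.compile(
    optimizer=keras.optimizers.Adam(learning_rate=1e-3),  # keras.optimizers
    loss=keras.losses.BinaryCrossentropy(),               # keras.losses
    metrics=["accuracy"],
)

model.summary()
```

With these sizes the summary reports 1,409 parameters (20 × 64 + 64 for the hidden layer, plus 64 + 1 for the output layer); calling model.fit with your own training data would then train it.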

Preprocessing Your Data for Keras Models

Preprocessing your data is an important step in optimizing your Keras models. This involves cleaning, normalizing, and transforming your data to make it suitable for training your model. Some common preprocessing techniques include:

- Data Cleaning: This involves handling missing or irrelevant data in your dataset, either by deleting the affected rows or columns or by imputing missing values (for example, with the column mean).

- Data Normalization: This involves scaling your features to comparable ranges, either to a fixed interval such as [0, 1] with min-max scaling or to zero mean and unit variance with z-score standardization.

- Data Transformation: This involves converting your data into a numeric form the model can consume, for example by one-hot encoding categorical variables.
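The three techniques above can be sketched in plain NumPy on a tiny, made-up dataset. This illustrates what each step computes rather than a full pipeline; the array values are invented, and mean imputation is just one possible cleaning strategy.

```python
import numpy as np

# Toy training matrix: 4 samples, 2 numeric features; np.nan marks a missing value.
X_train = np.array([[1.0, 200.0],
                    [2.0, np.nan],
                    [3.0, 400.0],
                    [4.0, 600.0]])

# Data cleaning: impute missing values with the column mean (NaNs ignored).
col_means = np.nanmean(X_train, axis=0)
X_clean = np.where(np.isnan(X_train), col_means, X_train)

# Min-max scaling: map each feature to [0, 1].
x_min, x_max = X_clean.min(axis=0), X_clean.max(axis=0)
X_minmax = (X_clean - x_min) / (x_max - x_min)

# Z-score standardization: zero mean and unit variance per feature.
mu, sigma = X_clean.mean(axis=0), X_clean.std(axis=0)
X_z = (X_clean - mu) / sigma

# One-hot encoding of integer class labels (3 classes).
y = np.array([0, 2, 1, 2])
num_classes = 3
y_onehot = np.eye(num_classes)[y]
```

In a real project, compute x_min, x_max, mu, and sigma from the training split only and reuse them to transform validation and test data, so no information leaks from the evaluation sets. Keras can also standardize inside the model with the keras.layers.Normalization preprocessing layer, whose adapt() method learns the mean and variance from training data.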

Choosing the Right Activation Functions for Your Keras Models

Activation functions play a crucial role in the performance of your Keras models. They introduce non-linearity into your model; without them, a stack of layers would collapse into a single linear transformation and could not learn complex patterns in your data. Some popular activation functions used in Keras models include:

- ReLU: This is the most commonly used activation function for hidden layers in deep learning. It outputs max(0, x), is cheap to compute, and suffers less from vanishing gradients in deep networks than sigmoid or tanh.

- Sigmoid: This activation function is typically used in the output layer for binary classification problems. It maps each output to a value between 0 and 1 that can be interpreted as a probability.

- Softmax: This activation function is typically used in the output layer for multi-class classification problems. It maps the outputs to a probability distribution over multiple classes, so the outputs sum to 1.
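For intuition, the three functions can be written directly in NumPy. This is a sketch of the math only; in a Keras model you would simply pass the name, for example keras.layers.Dense(64, activation="relu") for a hidden layer or activation="softmax" for a multi-class output layer.

```python
import numpy as np

def relu(x):
    # Element-wise max(0, x): negative inputs become 0.
    return np.maximum(0.0, x)

def sigmoid(x):
    # Squashes any real number into the interval (0, 1).
    return 1.0 / (1.0 + np.exp(-x))

def softmax(logits):
    # Subtracting the max avoids overflow in exp() without changing the result.
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

z = np.array([-2.0, 0.0, 3.0])
print(relu(z))
print(sigmoid(0.0))
probs = softmax(z)  # non-negative values that sum to 1
print(probs)
```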

Optimizing Your Keras Models with Regularization Techniques

Regularization techniques are used to reduce overfitting in your Keras models. Overfitting occurs when your model performs well on the training data but poorly on unseen data, such as the test set. This is a common problem in deep learning, and it can be mitigated using regularization techniques such as:

- L1 Regularization: This involves adding a penalty term to your loss function that is proportional to the sum of the absolute values of the weights in your model. It tends to drive many weights to exactly zero, producing sparse models.

- L2 Regularization: This involves adding a penalty term to your loss function that is proportional to the sum of the squared weights in your model. It shrinks all weights toward zero without usually making them exactly zero.

- Dropout: This involves randomly setting a fraction of a layer’s outputs to zero at each training step; dropout is disabled at inference time. This helps prevent overfitting by stopping neurons from relying too heavily on specific other neurons.
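The arithmetic behind these three techniques can be sketched in NumPy. The weight matrix and the 0.01 factors are made-up values; the penalty formulas follow the form Keras uses for keras.regularizers.l1 and keras.regularizers.l2 (a factor times the sum of absolute or squared weights), and the dropout function implements inverted dropout, which scales the kept units by 1 / (1 - rate) so that no rescaling is needed at inference.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical weight matrix for one Dense layer (3 inputs -> 2 units).
W = np.array([[0.5, -1.0],
              [2.0,  0.0],
              [-0.5, 1.5]])

l1_factor, l2_factor = 0.01, 0.01

# L1 penalty: factor * sum(|w|); encourages sparse weights.
l1_penalty = l1_factor * np.sum(np.abs(W))

# L2 penalty: factor * sum(w^2); shrinks all weights toward zero.
l2_penalty = l2_factor * np.sum(np.square(W))

def dropout(activations, rate, training):
    """Inverted dropout: zero roughly a fraction `rate` of units and rescale the rest."""
    if not training or rate == 0.0:
        return activations  # no-op at inference time
    keep_mask = rng.random(activations.shape) >= rate
    return activations * keep_mask / (1.0 - rate)

h = np.ones((2, 8))
h_train = dropout(h, rate=0.5, training=True)   # entries are 0.0 or 2.0
h_infer = dropout(h, rate=0.5, training=False)  # unchanged
```

The penalty is added to the data loss, so training minimizes data loss plus l1_penalty or l2_penalty. In Keras you would not write this yourself: pass kernel_regularizer=keras.regularizers.l2(0.01) to a layer such as Dense, and insert keras.layers.Dropout(0.5) between layers. Keras adds the penalties to the training loss and switches dropout off automatically outside of training.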

Tips for Improving Keras Model Performance

Here are some tips for improving the performance of your Keras models:

- Use Early Stopping: This involves monitoring your model’s performance on a validation dataset during training. If the validation metric does not improve for a set number of epochs (the patience), training stops early to prevent overfitting, and the best weights seen so far can be restored.

- Use Batch Normalization: This involves normalizing a layer’s inputs using the mean and variance of the current mini-batch, followed by a learned scale and shift. This helps stabilize training and often speeds it up.

- Use Transfer Learning: This involves using a model pre-trained on a large dataset as the starting point for your own model. This can save training time and improve performance, especially when your own dataset is small.
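The first two tips can be combined in a short training script. This sketch assumes Keras is installed and uses randomly generated data purely so that it runs end to end; the layer sizes, the patience of 5 epochs, and the 100-epoch cap are illustrative. Placing BatchNormalization between a bias-free Dense layer and its activation is one common convention, not the only option.

```python
import numpy as np
import keras

# Synthetic data for illustration only: 200 samples, 10 features, binary labels.
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 10)).astype("float32")
y = (x[:, 0] + x[:, 1] > 0).astype("float32").reshape(-1, 1)

model = keras.Sequential([
    keras.Input(shape=(10,)),
    keras.layers.Dense(32, use_bias=False),  # bias is redundant before batch norm's learned shift
    keras.layers.BatchNormalization(),       # normalize with mini-batch statistics, then scale and shift
    keras.layers.Activation("relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Stop when val_loss has not improved for 5 consecutive epochs.
early_stop = keras.callbacks.EarlyStopping(
    monitor="val_loss",
    patience=5,
    restore_best_weights=True,  # roll back to the best epoch's weights when stopping
)

history = model.fit(
    x, y,
    validation_split=0.2,  # hold out the last 20% of samples for validation
    epochs=100,            # upper bound; early stopping may end training sooner
    batch_size=32,
    callbacks=[early_stop],
    verbose=0,
)
print(f"Trained for {len(history.history['loss'])} epochs")
```

Because of the callback, fit can end before the 100-epoch cap once val_loss stops improving, so the number of epochs printed depends on the data.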

Common Mistakes to Avoid When Working with Keras

Here are some common mistakes to avoid when working with Keras:

- Using Too Many Layers: A model with more capacity than your data supports can overfit and perform poorly on new data. Start with a small architecture that suits your problem and add capacity only while validation performance keeps improving.

- Using Too Few Training Samples: Too little training data also leads to overfitting and poor generalization. Make sure you have enough training data for the model to learn the underlying patterns.

- Using the Wrong Activation Function: A mismatched activation function can lead to poor performance, especially in the output layer, which must match your loss function: sigmoid with binary cross-entropy, softmax with categorical cross-entropy, and typically a linear output with mean squared error for regression.
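A classic instance of the last mistake is putting softmax on a single-unit output layer, such as keras.layers.Dense(1, activation="softmax"), for a binary problem. Softmax normalizes across the units of a layer, so with only one unit it always returns 1.0. The NumPy sketch below, using made-up logits, contrasts that with sigmoid.

```python
import numpy as np

def softmax(logits):
    # Normalizes across the last axis (the units of the layer).
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Logits from a hypothetical single-unit output layer for three samples.
logits = np.array([[-3.0], [0.0], [4.0]])

wrong = softmax(logits)  # always 1.0: carries no information about the class
right = sigmoid(logits)  # a probability that varies with the logit
```

For binary classification, use Dense(1, activation="sigmoid") with binary cross-entropy, or Dense(2, activation="softmax") with categorical (or sparse categorical) cross-entropy.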

Advanced Keras Techniques for Deep Learning Models

Here are some advanced architectures you can build with Keras:

- Recurrent Neural Networks (RNNs): RNNs, including the LSTM and GRU variants, are used for sequential data, such as time series or text in natural language processing.

- Convolutional Neural Networks (CNNs): CNNs are used for image and video data, where convolutional filters exploit local spatial structure, and they have been shown to perform well in many applications.

- Generative Adversarial Networks (GANs): GANs train a generator network against a discriminator network so that the generator learns to produce new data similar to a training dataset.
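Here is a minimal sketch of how the first two architectures look in Keras, assuming Keras is installed. The shapes (28 × 28 grayscale images with 10 classes, and sequences with 8 features per time step) are illustrative assumptions. GANs are omitted because training them requires alternating updates of two networks, which takes more code than a short example allows.

```python
import keras

# CNN sketch for 28x28 grayscale images and 10 classes.
cnn = keras.Sequential([
    keras.Input(shape=(28, 28, 1)),
    keras.layers.Conv2D(16, kernel_size=3, activation="relu"),  # 16 learned 3x3 filters -> 26x26x16
    keras.layers.MaxPooling2D(pool_size=2),                     # downsample to 13x13x16
    keras.layers.Flatten(),
    keras.layers.Dense(10, activation="softmax"),               # class probabilities
])

# RNN sketch for variable-length sequences with 8 features per time step.
rnn = keras.Sequential([
    keras.Input(shape=(None, 8)),  # None: any sequence length
    keras.layers.LSTM(32),         # returns the final hidden state
    keras.layers.Dense(1),         # e.g. a single regression target
])

cnn.summary()
rnn.summary()
```

With these shapes the CNN has 27,210 parameters and the RNN has 5,281; swapping keras.layers.LSTM for keras.layers.GRU is a one-line change.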

Conclusion: The Future of Python Keras and Deep Learning

Keras is one of the most popular Python libraries for building and training deep learning models. Its user-friendly interface and large developer community make it a great choice for deep learning projects. As deep learning continues to evolve, we can expect new advances in Keras and other deep learning libraries. By following the best practices outlined in this article, you can optimize your Keras models and improve their performance across a wide range of applications.


Author


Naveen Pandey has more than 2 years of experience in data science and machine learning. He is an experienced Machine Learning Engineer with a strong background in data analysis, natural language processing, and machine learning. Holding a Bachelor of Science in Information Technology from Sikkim Manipal University, he excels in leveraging cutting-edge technologies such as Large Language Models (LLMs), TensorFlow, PyTorch, and Hugging Face to develop innovative solutions.
