Generative AI Interview Questions 2025 | Ultimate Guide to Crack AI Interviews

Naveen


As generative AI technology continues to advance, roles like Gen AI Engineers and Gen AI Specialists are experiencing high demand. While Gen AI Engineers focus on designing, developing, and deploying AI models, Gen AI Specialists concentrate on applying these models to real-world use cases, aligning them with business objectives, and driving innovation.

Generative AI Salary Insights

The growing demand for Gen AI roles comes with attractive salary packages:

- Gen AI Engineers:

  - India: ₹8 lakhs to ₹15 lakhs annually

  - United States: $100,000 to $150,000 annually

- Gen AI Specialists:

  - India: ₹12 lakhs to ₹25 lakhs annually

  - United States: $120,000 annually

With these lucrative salaries, the competition for jobs is increasing. Preparing for interviews is crucial to succeed in this competitive landscape. Let’s dive into the top generative AI interview questions you should know.

Q1. How is Traditional AI different from Generative AI?

Understanding the differences between traditional AI and generative AI is fundamental:

- Traditional AI: Relies on predefined algorithms and primarily learns from labeled data (supervised learning) to solve specific problems such as classification or prediction.

- Generative AI: Learns the underlying patterns and structure of its training data and uses them to create new content, such as text, images, audio, or code. This ability to generate fresh outputs sets generative AI apart.
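The distinction above can be illustrated with a toy sketch. It is not how production systems work: the data, the class names, and the simple Gaussian model are all assumptions chosen for illustration. The `classify` function assigns an existing label to an input (the traditional use), while `generate` samples new values that were never in the training data (the generative use).

```python
import random
import statistics

# Toy 1-D training data: heights in cm, grouped by hypothetical class names.
data = {
    "child": [110.0, 118.0, 125.0, 121.0],
    "adult": [165.0, 172.0, 180.0, 169.0],
}

def classify(height: float) -> str:
    """Traditional use: map an input to an existing label by picking
    the class whose mean is closest to the input."""
    return min(data, key=lambda label: abs(height - statistics.mean(data[label])))

def generate(label: str, n: int, rng: random.Random) -> list[float]:
    """Generative use: fit a Gaussian to one class, then sample new
    values from it."""
    mu = statistics.mean(data[label])
    sigma = statistics.stdev(data[label])
    return [rng.gauss(mu, sigma) for _ in range(n)]

rng = random.Random(0)               # seeded so runs are repeatable
print(classify(170.0))               # a label for a given input
print(generate("adult", 3, rng))     # new, plausible data points
```

Real generative models replace the single Gaussian with a neural network, but the idea is the same: learn the distribution of the data, then sample from it.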

Comparison of Functioning:

- Traditional AI Workflow:

  - Data collection

  - Model selection

  - Training

  - Evaluation

  - Feedback

  - Deployment

- Generative AI Workflow:

  - Data preprocessing

  - AI model training

  - Data generation

  - Identifying patterns

  - Iterative training (incorporates feedback)

  - Deployment

Applications:

- Traditional AI: Image detection, stock market prediction, fraud detection, voice recognition.

- Generative AI: Content creation, predictive fashion trends, code generation, advancements in healthcare.

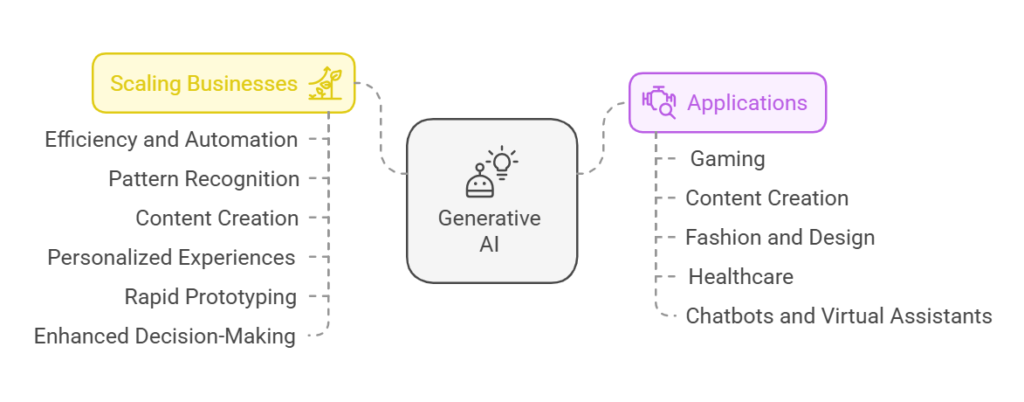

Q2. How does Generative AI help in Scaling Businesses?

Generative AI enables businesses to scale by automating processes, creating personalized customer experiences, and generating data-driven insights. Here’s how:

- Efficiency and Automation: Automates repetitive tasks like content creation, customer service via chatbots, and report generation.

- Pattern Recognition: Analyzes vast datasets to identify trends, optimize operations, and predict market shifts.

- Content Creation: Generates high-quality marketing materials and creative assets at scale.

- Personalized Experiences: Customizes interactions and recommendations to improve customer satisfaction and loyalty.

- Rapid Prototyping: Creates simulations, prototypes, and product designs, accelerating product development cycles.

- Enhanced Decision-Making: Provides actionable insights and forecasts, guiding businesses in resource allocation and market strategy.

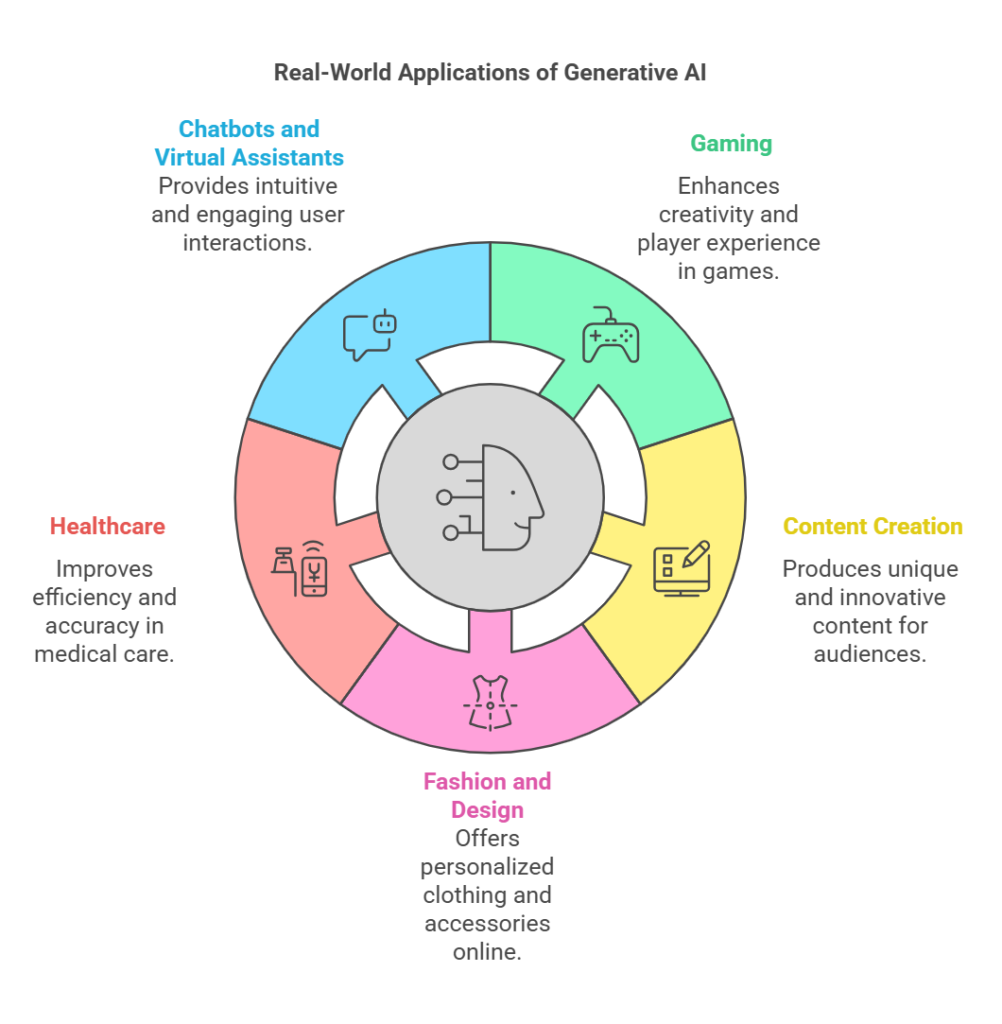

Q3. What are some applications of Generative AI in the real world?

Generative AI has a wide range of real-world applications, spanning gaming, content creation, fashion and design, healthcare, and chatbots.

In the gaming industry, companies like Nvidia and Ubisoft are utilizing generative AI to enhance various aspects of game development and player experiences. Generative AI is being used to boost creativity, improve screen time dynamics, refine shadow techniques, and elevate the overall player experience, making games more impressive and engaging.

Next, in content creation, companies like Microsoft (through Azure) and OpenAI, the creator of ChatGPT, are developing advanced algorithms to generate unique and innovative content. This content not only captivates audiences but is also highly appreciated by critics, showcasing the transformative potential of generative AI in creative industries.

In fashion and design, companies like H&M Group and Nike are leveraging generative AI to offer personalized clothing, shoes, and accessories online. This innovative use of AI has brought significant advancements, enabling these brands to enhance customer experiences and set new standards in personalized fashion.

In the healthcare industry, companies like Atomwise and Insilico Medicine are utilizing generative AI to improve efficiency and accuracy in medical care. Given the critical importance of precision when it comes to saving lives, generative AI plays a pivotal role in advancing medical research, diagnosis, and treatment solutions.

Finally, in chatbots and virtual assistants, generative AI is being used to enhance user experiences by providing more accurate, intuitive, and engaging interactions.

Q4. What are some popular Generative AI models you know?

The first one we have is GPT. GPT stands for Generative Pre-trained Transformer, a series of language models pre-trained on large text corpora to generate human-like text, enabling tasks like text generation, translation, and summarization.

Next, we have BERT (Bidirectional Encoder Representations from Transformers), a language model that reads context in both directions, left to right and right to left. BERT is an encoder-only model built for understanding text rather than open-ended generation, so it is best suited to tasks such as question answering and language understanding.

Next are DALL·E and DALL·E 2. These AI models generate images from textual descriptions, with DALL·E 2 offering enhanced image quality and more accurate interpretations of complex prompts.

Q5. What are some challenges associated with Generative AI?

Some key challenges associated with generative AI include:

- Data Privacy and Security: The large datasets used for training generative AI models may compromise user privacy or include sensitive information, leading to regulatory and ethical concerns.

- Bias and Fairness: Generative AI can amplify biases present in the training data, resulting in outputs that are unfair or discriminatory.

- Quality and Accuracy: Ensuring the quality and factual accuracy of AI-generated content is challenging, especially when working with diverse and extensive datasets.

- Interpretability and Transparency: Generated content can lack traceability, making it difficult to explain or justify outputs. There is also the potential for outputs to closely resemble copyrighted material, raising legal and ethical concerns.

- Ethical Concerns and Misuse: Generative AI can be misused for malicious purposes, such as creating deepfakes, spreading misinformation, or automating spam, leading to serious ethical challenges.

- Resource Intensity: Training generative AI models requires significant computational resources, which leads to high costs and environmental concerns.

- Deployment Challenges: Ensuring robust, scalable, and safe deployment of generative AI models in real-world applications is complex.

Addressing these challenges requires a combination of technical solutions, ethical guidelines, and legal frameworks to ensure responsible and effective use of generative AI.

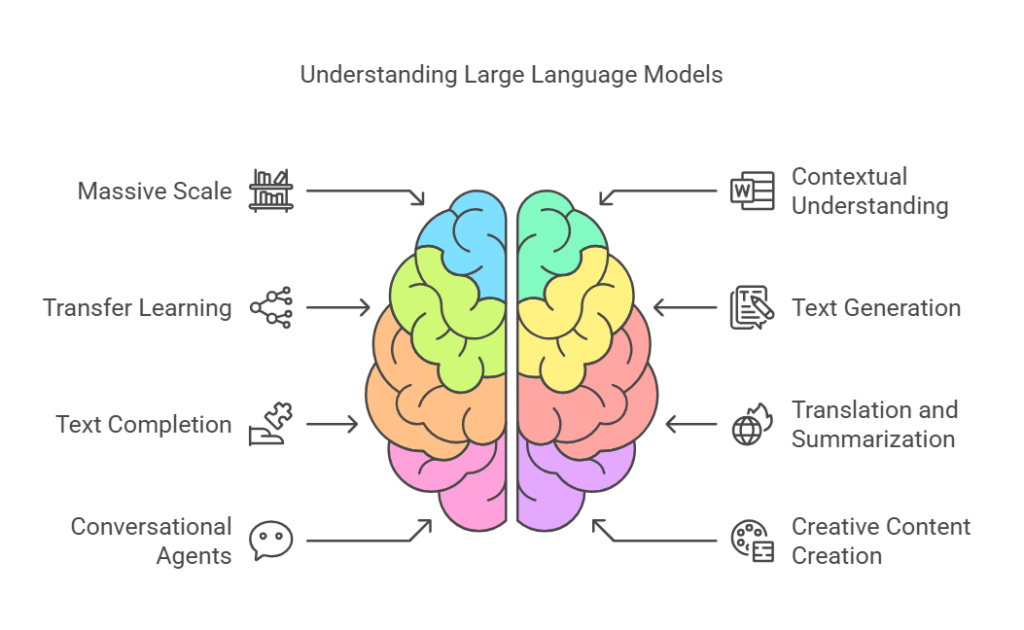

Q6. What is a Large Language Model and how is it applied in Generative AI?

A Large Language Model (LLM) is a type of artificial intelligence model trained on vast amounts of text data to understand and generate human-like language. LLMs are typically built using deep learning architectures, such as transformer-based models like GPT, BERT, and T5. These models contain from hundreds of millions to hundreds of billions of parameters (GPT-3, for example, has 175 billion), enabling them to capture the nuances of natural language.

Key Features of LLMs:

- Massive Scale: LLMs are trained on extensive datasets from diverse domains, including books, articles, and websites.

- Contextual Understanding: LLMs can understand the context of words, phrases, and sentences by processing long-range dependencies, enabling coherent and contextually relevant text generation.

- Transfer Learning: Pre-trained LLMs can be fine-tuned on specific tasks or datasets, allowing them to perform a wide range of language-related tasks with minimal task-specific data.

Applications of LLMs in Generative AI

- Text Generation: LLMs can generate coherent and human-like text based on a given prompt. For example, models like GPT-3 can write articles, create dialogues, generate poetry, or even produce code.

- Text Completion: LLMs can predict and complete sentences or paragraphs based on initial input, making them valuable for applications like auto-completion, chatbots, and writing assistance.

- Translation and Summarization: LLMs can translate text between languages or summarize lengthy documents into concise summaries.

- Conversational Agents: LLMs power conversational AI systems such as chatbots and virtual assistants, enabling dynamic and human-like interactions.

- Creative Content Creation: LLMs are used to generate creative content, including marketing copy, articles, and even scripts for videos or films.

- Sentiment Analysis and Classification: Fine-tuned LLMs can classify text for tasks like sentiment analysis or spam detection, predicting labels based on context and training data.

- Code Generation: LLMs assist in programming by generating code snippets based on natural language descriptions.

Q7. What is Prompt Engineering and why is it important in Generative AI?

Prompt engineering is the process of designing and refining prompts to guide the output of generative AI models effectively. A prompt is the input or instruction provided to the model, which it uses to generate a response.

The quality, clarity, and structure of the prompt significantly influence the model’s output, making prompt engineering a critical skill for maximizing the performance and usefulness of AI models.

Key Objectives of Prompt Engineering:

- Improving Accuracy: Ensures precise and relevant responses.

- Enhancing Relevance: Tailors outputs to specific user needs.

- Increasing Efficiency: Streamlines processes for better performance.
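To make the idea concrete, here is a minimal sketch that contrasts a vague prompt with a structured few-shot prompt. The `build_prompt` helper and the `Instruction:` / `Input:` / `Output:` layout are assumptions for illustration, not a standard required by any particular model.

```python
def build_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble a few-shot prompt: an instruction, worked examples,
    then the new input the model should complete."""
    lines = [f"Instruction: {task}", ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

# A vague prompt leaves the task, format, and scope open to interpretation.
vague_prompt = "Tell me about this review."

# A structured prompt states the task and shows the expected output format.
structured_prompt = build_prompt(
    task="Classify the sentiment of the review as positive or negative.",
    examples=[
        ("Great battery life.", "positive"),
        ("Stopped working in a week.", "negative"),
    ],
    query="The screen is sharp and bright.",
)
print(structured_prompt)
```

The structured version tells the model what to do, shows the answer format through examples, and ends exactly where the answer should begin, which tends to produce more precise and relevant responses.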

Q8. How does Generative AI generate text-based content?

Generative AI creates text-based content through a series of key steps. Here’s a simplified explanation:

- Training on Large Datasets: Generative AI models, like GPT, are pre-trained on extensive datasets that include text from books, articles, and websites. This training helps the model learn language patterns, context, and general knowledge, forming the foundation for its ability to perform various language tasks effectively.

- Model Architecture: These AI models are built on the transformer architecture, a highly efficient framework for processing sequential data. Transformers use mechanisms like self-attention to understand context and relationships within text, enabling capabilities such as language generation and comprehension.

- Generating Responses: Given a prompt, the model produces text one token at a time. At each step it predicts the likely next tokens from the prompt and the text generated so far, picks one, and repeats until the response is complete.

- Iterative Improvement: The model is refined through additional training, feedback, and adjustments. This process helps enhance the model's performance over time.
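The self-attention mechanism mentioned under Model Architecture can be sketched in a few lines of plain Python. This is a simplified illustration: real transformers first apply learned projection matrices to obtain queries, keys, and values, whereas this sketch assumes all three are simply the input vectors. The token vectors are made-up values.

```python
import math

def softmax(xs: list[float]) -> list[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)                              # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product self-attention with queries = keys = values = inputs
    (an assumption of this sketch; learned projections are omitted)."""
    d = len(vectors[0])
    outputs = []
    for q in vectors:
        # Score how relevant every token is to the current token.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # Each output is a weighted mix of all token vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]
        outputs.append(mixed)
    return outputs

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three hypothetical token vectors
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Because every output row blends information from all tokens, each position ends up with a representation that reflects its context.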

In summary, generative AI models like GPT-4 create text by repeatedly predicting the next token from patterns learned during training, while keeping track of the context generated so far. T5 is another transformer-based model that generates text. BERT belongs to the same architecture family but is an encoder-only model used mainly for language understanding.
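The predict-then-repeat loop can be shown with a toy word-level bigram model. This is a deliberately tiny stand-in for an LLM: it only looks at the previous word, while real models use neural networks over much longer contexts. The corpus and function names are assumptions for illustration.

```python
import random
from collections import defaultdict

def train_bigram(text: str) -> dict[str, list[str]]:
    """Record, for every word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)

def generate(model: dict[str, list[str]], start: str, max_words: int,
             rng: random.Random) -> str:
    """Generate text one word at a time by sampling a follower of the last word."""
    output = [start]
    for _ in range(max_words - 1):
        candidates = model.get(output[-1])
        if not candidates:          # no known continuation: stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)

corpus = "the model reads the prompt and the model predicts the next word"
model = train_bigram(corpus)
print(generate(model, "the", 8, random.Random(0)))
```

Words that followed "the" more often in the corpus are more likely to be sampled, which is a small-scale version of predicting word sequences from learned patterns.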

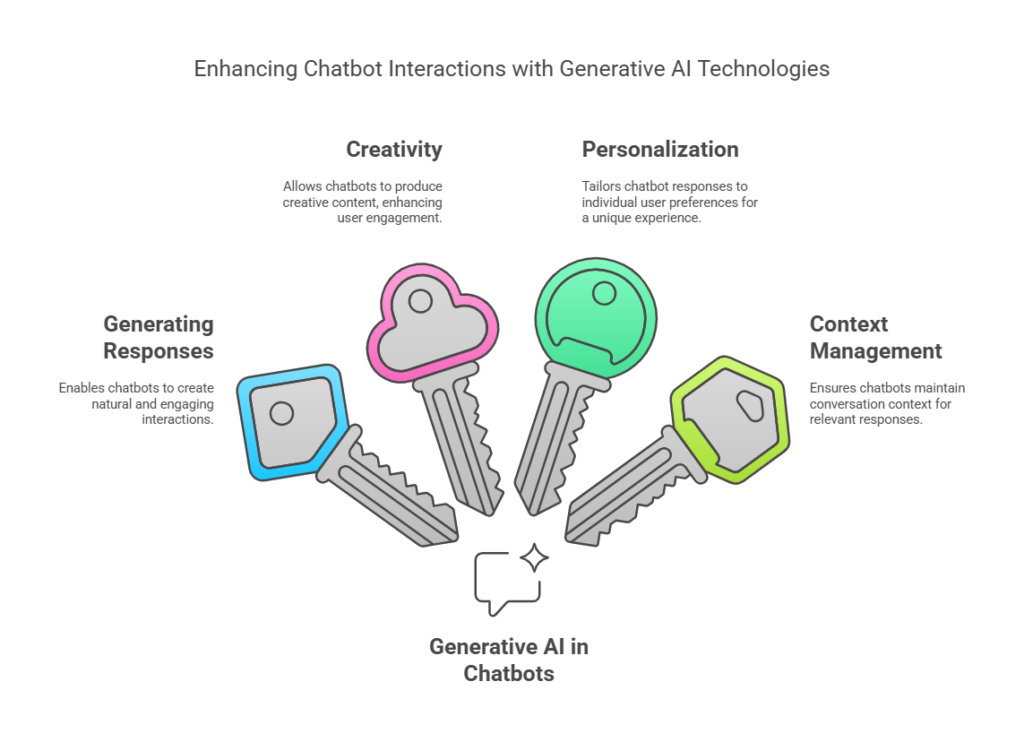

Q9. Can you explain how Generative AI is used in Chatbots and Language Translation?

Generative AI enhances chatbots by enabling them to produce contextually relevant, coherent, and human-like responses, thereby improving the overall user experience. Key areas where generative AI contributes include:

- Generating Responses: By leveraging large language models, generative AI can create more natural and engaging responses, making chatbot interactions feel more human-like and conversational.

- Creativity: Generative AI can produce creative content such as poems, stories, or scripts, which can be integrated into chatbot interactions to make conversations more entertaining and engaging.

- Personalization: Generative AI can analyze user data to tailor chatbot responses, creating a more personalized experience that feels unique to each individual.

- Context Management: Generative AI can maintain context throughout a conversation, enabling chatbots to understand and respond appropriately to the user's evolving needs and preferences.
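One common way to handle context management is to send the recent conversation history along with each new message. The sketch below assumes a simple sliding window of turns; the `ConversationMemory` class and the `user:` / `assistant:` layout are hypothetical, and a real chatbot would pass the resulting prompt to a language model.

```python
from collections import deque

class ConversationMemory:
    """Keeps only the most recent turns so the prompt stays within a size budget."""

    def __init__(self, max_turns: int = 4):
        # A bounded deque drops the oldest turn automatically when full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def as_prompt(self, new_message: str) -> str:
        """Combine the remembered turns with the new user message."""
        history = [f"{role}: {text}" for role, text in self.turns]
        return "\n".join(history + [f"user: {new_message}", "assistant:"])

memory = ConversationMemory(max_turns=2)
memory.add("user", "I need a laptop for travel.")
memory.add("assistant", "Do you prefer a 13-inch or 14-inch screen?")
memory.add("user", "13-inch, please.")      # the first turn is now dropped
print(memory.as_prompt("What about battery life?"))
```

A fixed window is the simplest option. It trades long-term memory for a bounded prompt size, which is why the earliest turn no longer appears in the printed prompt.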

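These capabilities are transforming chatbots, making them more effective, user-friendly, and engaging. Language translation tools benefit in the same way: the model reads text in the source language and generates the equivalent text in the target language, using the surrounding context to resolve ambiguous words and phrases.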

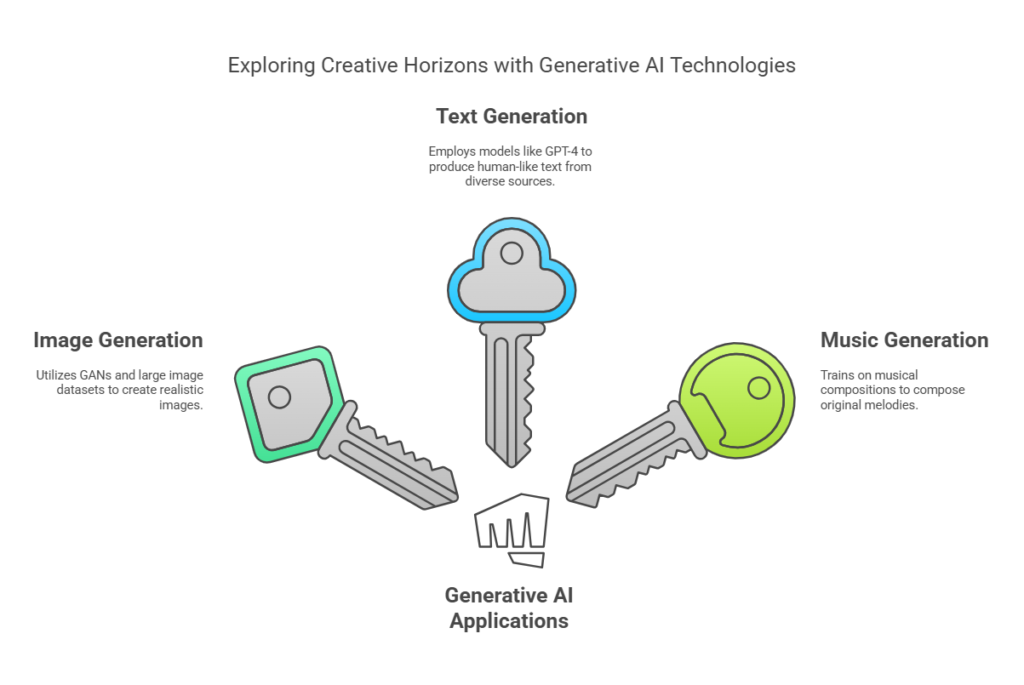

Q10. What is the role of data in training Generative AI models?

Data plays a crucial role in training generative AI models, as the quality, quantity, and diversity of the data significantly impact the model’s performance and output. Here are some examples of data usage in generative AI models:

- Image Generation: For models like GANs (Generative Adversarial Networks), large datasets of images are used to teach the models how to generate realistic and high-quality images.

- Text Generation: For models like GPT-4, text from various sources such as books, articles, and websites is used to train the model to understand and generate human-like text.

- Music Generation: Music generation models, trained on large collections of musical compositions, learn patterns in melody, harmony, and rhythm to create new and original music.
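Since data quality affects the model's output, training text is usually cleaned before use. The sketch below shows one possible minimal cleaning pass; the `clean_corpus` function, its `min_words` threshold, and the sample documents are assumptions for illustration, and real pipelines apply many more filters.

```python
def clean_corpus(documents: list[str], min_words: int = 3) -> list[str]:
    """Normalize whitespace, drop very short documents, and remove
    case-insensitive exact duplicates, keeping the first occurrence."""
    seen = set()
    cleaned = []
    for doc in documents:
        text = " ".join(doc.split())     # collapse runs of whitespace
        key = text.lower()               # key for the duplicate check
        if len(text.split()) < min_words or key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned

raw = [
    "Generative models learn   patterns from data.",
    "generative models learn patterns from data.",   # duplicate apart from case
    "ok",                                            # too short to be useful
    "Diverse data improves coverage of rare cases.",
]
print(clean_corpus(raw))
```

Removing duplicates and low-content entries addresses quality, while keeping varied documents preserves the diversity the model needs.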

Conclusion

In this article, we discussed 10 questions you should know if you are preparing for Generative AI interviews. As generative AI continues to evolve, understanding these concepts and their practical applications becomes increasingly important for professionals in the field.

Author


Naveen Pandey has more than 2 years of experience in data science and machine learning. He is an experienced Machine Learning Engineer with a strong background in data analysis, natural language processing, and machine learning. Holding a Bachelor of Science in Information Technology from Sikkim Manipal University, he excels in leveraging cutting-edge technologies such as Large Language Models (LLMs), TensorFlow, PyTorch, and Hugging Face to develop innovative solutions.
