5 Tricks for Text Pre-processing in Machine Learning

Naveen

Naveen- 0

Text preprocessing is an important step in any machine learning project which involves processing text data. The quality of the preprocessing place an important role in performance of the model. In this article, we will discuss 5 tips for text preprocessing in machine learning to help improve the accuracy of the models.

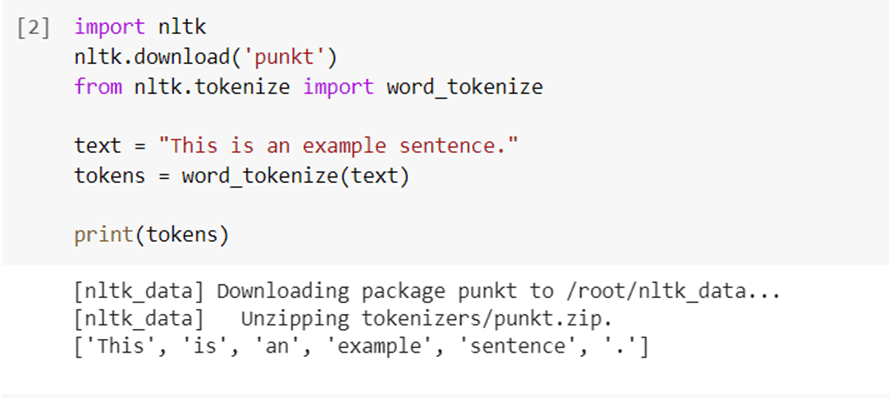

1 – Tokenization

Tokenization is the technique which we use to break down the sentences into words known as tokens. These tokens can be words, phrases, or even man or woman characters. Tokenization is the first step in most NLP Projects, and there are multiple libraries present in Python that can help you to tokenize your text.

Let’s see an example of how to implement tokenization in Python:

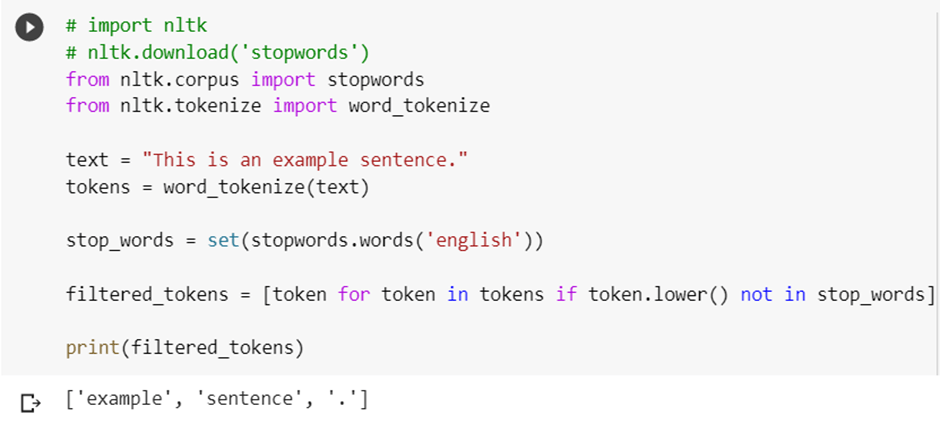

2 – Stop word removal

Stop words are common words that do not carry much meaning, such as “the”, “a”, “an”, etc. Removing these words from your text can help you reduce the noise and improve the accuracy of your models.

Let’s see an example of how to remove stop words from the text data:

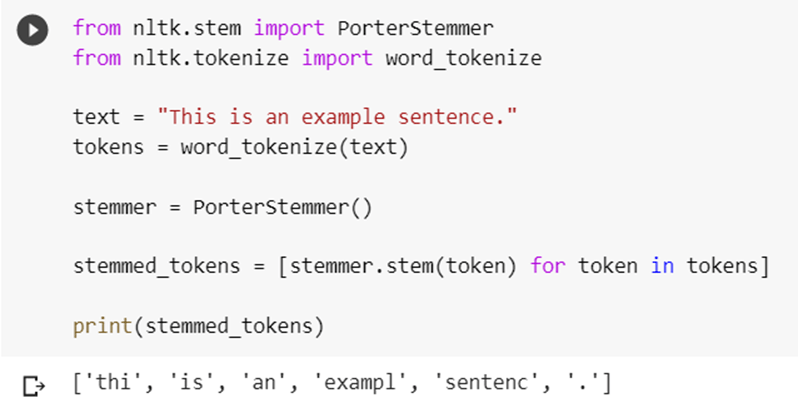

3 – Stemming

Stemming is the process of reducing words to their base or root form. This can help you reduce the number of unique words in your text and improve the accuracy of your models.

Let’s see an example of how to implement Stemming in Python:

4 – Lemmatization

Lemmatization is similar to stemming, but it reduces words to their base form based on their part of speech. This can help improve the accuracy of your models by preserving the meaning of the original words.

Let’s see an example of how to implement Lemmatization in Python:

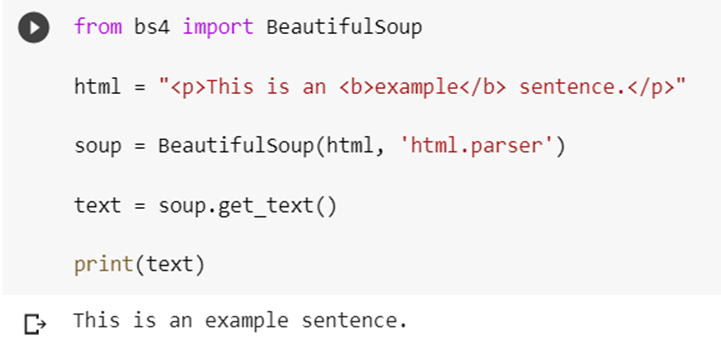

5 – Removing HTML tags

If you are working with web data, you may need to remove HTML tags from your text. This can be done with the help of regular expressions or a web scraping library like Beautiful Soup.

Let’s see an example of how to use regular expression or Beautiful soup in Python code:

Conclusion

In this article we have seen 5 tricks for text preproceessing in machine learning that can help you improve the accuracy of your models and get the better results. However, it’s important to remember that the optimal pre-processing steps may vary depending on your specific use case, so it’s always a good idea to experiment and try different techniques.

Popular Posts

Author

-

Naveen Pandey has more than 2 years of experience in data science and machine learning. He is an experienced Machine Learning Engineer with a strong background in data analysis, natural language processing, and machine learning. Holding a Bachelor of Science in Information Technology from Sikkim Manipal University, he excels in leveraging cutting-edge technologies such as Large Language Models (LLMs), TensorFlow, PyTorch, and Hugging Face to develop innovative solutions.

View all posts