What are Precision, Recall, Accuracy and F1-score?

Naveen

Precision, Recall and Accuracy are three metrics used to measure the performance of a machine learning classification model.

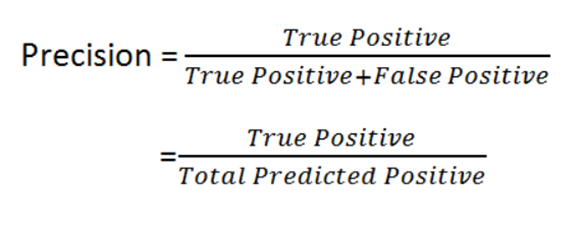

Precision is the ratio of true positives to the sum of true positives and false positives, that is, TP / (TP + FP). It is also known as positive predictive value.

Precision is a useful metric because it shows, out of all the cases predicted as positive, how many were actually positive.

If the cost of a false positive is high, precision is a good metric to optimize. Email spam detection is an example. If an email that is not spam gets flagged as spam, that is a false positive, and the user may never see it. Some email users might lose important emails if the precision of the spam detector is not high enough.
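As a minimal sketch of the definition above, precision can be computed directly from lists of actual and predicted labels. The function name `precision`, the `positive` parameter, and the sample labels are made up for illustration, and returning 0.0 when nothing is predicted positive is an assumed convention.

```python
def precision(y_true, y_pred, positive=1):
    """Return TP / (TP + FP) for the given positive label (hypothetical helper)."""
    pairs = list(zip(y_true, y_pred))
    # True positives: predicted positive and actually positive.
    tp = sum(1 for t, p in pairs if p == positive and t == positive)
    # False positives: predicted positive but actually negative.
    fp = sum(1 for t, p in pairs if p == positive and t != positive)
    predicted_positive = tp + fp
    # Assumed convention: 0.0 when the model predicted no positives at all.
    return tp / predicted_positive if predicted_positive else 0.0


# Made-up labels for illustration: 1 = spam, 0 = not spam.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0]

precision_value = precision(y_true, y_pred)
print(precision_value)  # 3 true positives and 1 false positive: 3 / 4 = 0.75
```

Here four emails were flagged as spam and three of them really were spam, so one legitimate email was lost to the spam folder.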

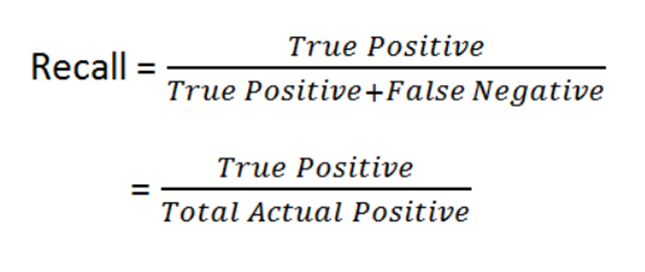

Recall

Recall is the ratio of true positives to all actual positives, that is, TP / (TP + FN). It is also known as sensitivity or true positive rate.

So Recall is simply the proportion of actual positives that our model is able to catch by labelling them as positive. When the cost of a False Negative is greater than that of a False Positive, we should select our best model using Recall.

Fraud detection is one example. If a fraudulent transaction is predicted as non-fraudulent, the consequences for the bank can be serious.

Patient diagnosis is another. If a patient who is actually sick (Actual Positive) is predicted as not sick (Predicted Negative), the cost of that False Negative can be extremely high, especially when contagious diseases are involved.
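Recall can be sketched in the same way, counting how many of the actual positives the model caught. The function name `recall`, the `positive` parameter, and the sample labels are hypothetical, and returning 0.0 when there are no actual positives is an assumed convention.

```python
def recall(y_true, y_pred, positive=1):
    """Return TP / (TP + FN) for the given positive label (hypothetical helper)."""
    pairs = list(zip(y_true, y_pred))
    # True positives: actually positive and predicted positive.
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    # False negatives: actually positive but predicted negative.
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    actual_positive = tp + fn
    # Assumed convention: 0.0 when the data contains no actual positives.
    return tp / actual_positive if actual_positive else 0.0


# Made-up labels for illustration: 1 = fraudulent, 0 = legitimate.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0]

recall_value = recall(y_true, y_pred)
print(recall_value)  # 3 true positives and 2 false negatives: 3 / 5 = 0.6
```

In this sample, two of the five fraudulent transactions slipped through as false negatives, which is exactly what a low recall signals.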

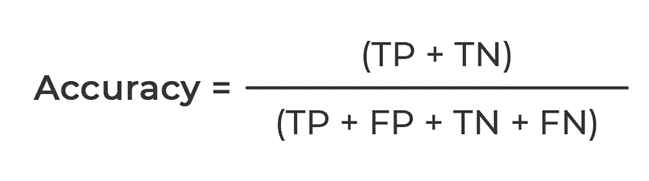

Accuracy

Accuracy is the ratio of correct predictions to all predictions made by an algorithm. It is calculated as (TP + TN) / (TP + TN + FP + FN), where TN is the number of true negatives and FN is the number of false negatives.
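A short sketch of this calculation, assuming the labels are held in two plain Python lists. The function name `accuracy` and the sample labels are made up for illustration.

```python
def accuracy(y_true, y_pred):
    """Return the share of predictions that match the actual label (hypothetical helper)."""
    # A prediction is correct when it is a true positive or a true negative.
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    # Assumed convention: 0.0 for an empty input.
    return correct / len(y_true) if y_true else 0.0


# Made-up labels for illustration: 1 = positive, 0 = negative.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0]

accuracy_value = accuracy(y_true, y_pred)
print(accuracy_value)  # 3 TP + 4 TN correct out of 10 predictions: 0.7
```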

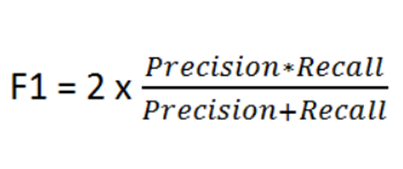

F1-score

The F1-score combines Precision and Recall into one single metric that ranges from 0 to 1. It is the harmonic mean of the two: F1 = 2 × (Precision × Recall) / (Precision + Recall).

The F1 score is useful when both precision and recall are important to you. We've established that Accuracy means the percentage of positives and negatives identified correctly. Accuracy, however, can be driven largely by a high number of true negatives, which we tend not to focus on when making decisions, whereas false positives and false negatives usually have both tangible and intangible costs for the business. The F1 score may be a better measure to use in those cases, as it balances precision and recall.
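The balance between precision and recall can be illustrated with a small sketch of the harmonic mean. The function name `harmonic_f1` and the input values are hypothetical, and returning 0.0 when both inputs are zero is an assumed convention.

```python
def harmonic_f1(precision, recall):
    """Return the harmonic mean of precision and recall (hypothetical helper)."""
    # Assumed convention: 0.0 when both precision and recall are zero.
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Made-up values for illustration.
f1_value = harmonic_f1(0.75, 0.6)
print(round(f1_value, 3))  # 0.667

# A model with perfect precision but poor recall still gets a low F1.
lopsided_f1 = harmonic_f1(1.0, 0.1)
print(round(lopsided_f1, 3))  # 0.182
```

The second call shows why the harmonic mean is used: a plain average of 1.0 and 0.1 would be 0.55, while the F1 score stays close to the weaker of the two values.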

I hope these explanations will help those starting out in Data Science. Accuracy isn't always the best measure to go by when you're trying to find the model that will perform best.
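To make that last point concrete, here is a small sketch with an imbalanced dataset. The numbers are invented for illustration: 100 transactions of which 5 are fraudulent, and a hypothetical model that labels everything as legitimate.

```python
# Made-up data for illustration: 1 = fraudulent, 0 = legitimate.
y_true = [1] * 5 + [0] * 95
# Hypothetical model that never predicts fraud.
y_pred = [0] * 100

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)
tn = sum(1 for t, p in pairs if t == 0 and p == 0)
fp = sum(1 for t, p in pairs if t == 0 and p == 1)
fn = sum(1 for t, p in pairs if t == 1 and p == 0)

accuracy = (tp + tn) / len(y_true)
# Assumed convention: a ratio with a zero denominator is reported as 0.0.
precision = tp / (tp + fp) if (tp + fp) else 0.0
recall = tp / (tp + fn) if (tp + fn) else 0.0
f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0

print(accuracy)  # 0.95
print(recall)    # 0.0
print(f1)        # 0.0
```

The accuracy looks excellent even though the model has not caught a single fraudulent transaction, which is what recall and F1 reveal.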

Author

Naveen Pandey has more than 2 years of experience in data science and machine learning. He is an experienced Machine Learning Engineer with a strong background in data analysis, natural language processing, and machine learning. Holding a Bachelor of Science in Information Technology from Sikkim Manipal University, he excels in leveraging cutting-edge technologies such as Large Language Models (LLMs), TensorFlow, PyTorch, and Hugging Face to develop innovative solutions.
