XGBoost: The Decision Tree-Based Ensemble Machine Learning Algorithm

Naveen

XGBoost (eXtreme Gradient Boosting) is a machine learning library that has become a standard tool for predictive modeling. Tianqi Chen started the project, which was first released in 2014 and later described in a 2016 paper by Chen and Carlos Guestrin. It has become popular because of its strong predictive performance. In this article, we look at how XGBoost works, its essential attributes, and why it is a strong option for predictive modeling.

XGBoost is an open-source library that implements gradient-boosted decision trees. It is an ensemble method: many decision trees, called base learners, are combined to produce a single prediction. The trees are trained one after another within a gradient boosting framework, so each new tree is fit to the errors left by the trees before it, and the final prediction is the sum of all the trees' outputs. Each added tree corrects part of the remaining error, which is what improves the accuracy of the final prediction.
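
To make the idea of "each tree learns from the errors of its predecessors" concrete, here is a minimal sketch of plain gradient boosting for squared error, using one-split trees (stumps) on a single feature. It is not XGBoost's implementation, which adds regularization, second-order gradient information, and many engineering optimizations. The function names, the toy data, and the default settings are assumptions made for illustration.

```python
from statistics import mean


def fit_stump(xs, residuals):
    """Fit a one-split regression tree (a stump) to the residuals by least squares."""
    best = None
    # Try a split at every distinct x except the largest, so both sides are non-empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_value, right_value = mean(left), mean(right)
        sse = (sum((r - left_value) ** 2 for r in left)
               + sum((r - right_value) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_value, right_value)
    return best[1:]  # (threshold, left_value, right_value)


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def boost(xs, ys, n_rounds=50, learning_rate=0.3):
    """Train stumps sequentially; each one is fit to the current residuals."""
    base = mean(ys)  # round 0: predict the average target
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For squared error, the residuals are the negative gradient of the loss.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    """Final prediction: the base value plus the scaled sum of all stump outputs."""
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mse(model, xs, ys):
    return mean((y - predict(model, x)) ** 2 for x, y in zip(xs, ys))


# Toy data (hypothetical): a noisy, roughly linear trend.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.5, 3.2, 3.8, 5.1, 6.3, 6.9, 8.4]

for rounds in (0, 1, 50):
    model = boost(xs, ys, n_rounds=rounds)
    print(rounds, "rounds -> training MSE", round(mse(model, xs, ys), 4))
```

The training error shrinks as rounds are added because every stump is fit to whatever the previous stumps left unexplained. The learning rate scales each stump's contribution, so no single tree dominates the ensemble.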

Why XGBoost is the Ideal Choice

XGBoost has drawn attention in recent years for its strong performance on a wide range of prediction problems. On structured (tabular) data it often matches or outperforms other algorithms, including random forests. On unstructured data such as images, audio, or free text, neural networks are usually the better fit. Here are some notable aspects of XGBoost that make it stand out:

Wide Range of Applications: XGBoost can be used for regression, classification, ranking, and user-defined objectives, which makes it a general-purpose tool that can be applied in many fields.

Portability: XGBoost runs on Windows, Linux, and macOS (formerly OS X), so you can use it on whichever of these platforms suits your needs.

Multiple Language Support: XGBoost supports various programming languages such as C++, Python, R, Java, Scala, and Julia, making it accessible to numerous users regardless of their preferred programming language.
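
The "user-defined" objectives mentioned in the list above work because gradient boosting of this kind only needs two quantities from a loss function for each prediction: its first derivative (gradient) and its second derivative (Hessian). The sketch below computes both for squared error and for logistic loss in plain Python, then uses them in the regularized leaf-value formula from the XGBoost paper, -G / (H + lambda). The function names are hypothetical and this is not the library's API; consult the XGBoost documentation for the exact signature a custom objective must have.

```python
import math


def squared_error_grad_hess(preds, labels):
    """Loss 0.5 * (pred - label)^2: the gradient is the error, the Hessian is 1."""
    grad = [p - y for p, y in zip(preds, labels)]
    hess = [1.0] * len(preds)
    return grad, hess


def logistic_grad_hess(raw_scores, labels):
    """Log loss on raw (pre-sigmoid) scores with labels in {0, 1}."""
    probs = [1.0 / (1.0 + math.exp(-s)) for s in raw_scores]
    grad = [p - y for p, y in zip(probs, labels)]
    hess = [p * (1.0 - p) for p in probs]
    return grad, hess


def leaf_weight(grad, hess, reg_lambda=1.0):
    """Second-order optimal value for one leaf: -sum(grad) / (sum(hess) + lambda)."""
    return -sum(grad) / (sum(hess) + reg_lambda)


# Three examples that all fall into the same leaf, starting from a prediction of 0.
grad, hess = squared_error_grad_hess([0.0, 0.0, 0.0], [1.0, 2.0, 3.0])
print("unregularized leaf value:", leaf_weight(grad, hess, reg_lambda=0.0))
print("regularized leaf value:  ", leaf_weight(grad, hess, reg_lambda=1.0))
```

With squared error and no regularization, the leaf value reduces to the mean of the residuals in that leaf. A positive lambda shrinks the value toward zero. Swapping in a different loss only changes the gradient and Hessian functions, not the tree-building logic.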

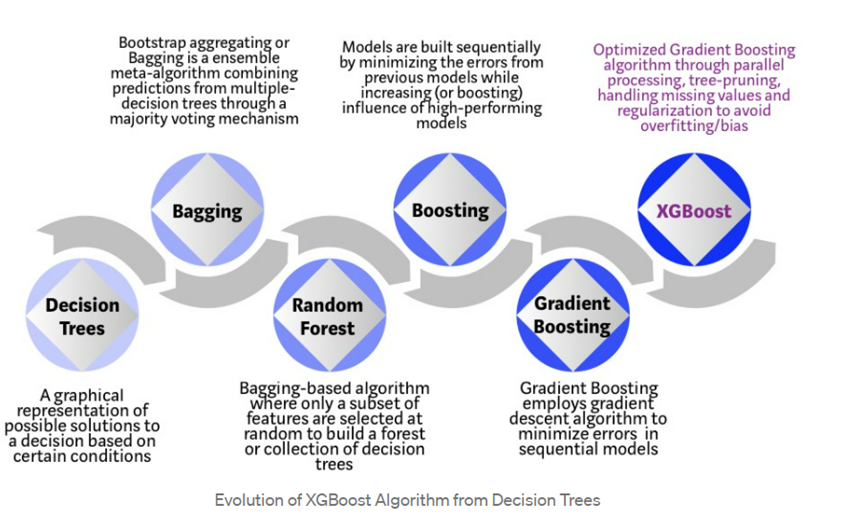

Progression of Decision Tree-Based Algorithms

Decision tree-based algorithms have existed for several decades and have evolved significantly over the years. Today, tree-based ensembles are widely regarded as one of the best options for small-to-medium structured/tabular data. Here is a brief summary of how decision tree-based algorithms have advanced over time:

CART: Classification And Regression Trees (CART) was introduced by Breiman, Friedman, Olshen, and Stone in 1984. It builds binary trees and, as the name says, handles both classification and regression problems.

ID3: Iterative Dichotomiser 3 (ID3) was developed by Ross Quinlan and published in 1986, independently of CART. It builds classification trees over categorical attributes and chooses splits by information gain.

C4.5: C4.5, published by Quinlan in 1993, was an extension of ID3 that could handle both categorical and continuous variables, as well as missing values and pruning.

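Random Forests: Random forests were introduced by Leo Breiman in 2001 and are a type of decision tree-based ensemble learning algorithm. They train many trees independently on bootstrap samples of the data, using random subsets of features, and combine their predictions. They are often highly accurate and scale well to large datasets.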

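XGBoost: XGBoost is a more recent addition to the family of decision tree-based algorithms, first released in 2014. Unlike random forests, it builds its trees sequentially with gradient boosting. It performs strongly on a wide range of prediction problems, particularly on tabular data.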

Conclusion

XGBoost is an impressive machine learning library that has gained popularity due to its strong performance on prediction problems. Its accuracy on structured data, its portability, and its support for multiple languages make it a versatile tool for data scientists. Decision tree-based algorithms have evolved substantially over the years, and XGBoost is one of the most widely used recent results of that evolution. As a data scientist, keeping up with advances in the field is vital.

Author

Naveen Pandey has more than 2 years of experience in data science and machine learning. He is an experienced Machine Learning Engineer with a strong background in data analysis, natural language processing, and machine learning. Holding a Bachelor of Science in Information Technology from Sikkim Manipal University, he excels in leveraging cutting-edge technologies such as Large Language Models (LLMs), TensorFlow, PyTorch, and Hugging Face to develop innovative solutions.